Introduction

If you’ve ever tried to set up a deep learning environment from scratch, you know the story: endless dependency conflicts, CUDA driver mismatches, Python version chaos, and hours wasted combing through Stack Overflow posts. For AI/ML enthusiasts, researchers, and developers, these roadblocks often feel like unnecessary gatekeepers standing between them and actual innovation.

This is where SimplePod.ai steps in. By offering pre-configured AI environments that are ready to launch within minutes, SimplePod.ai eliminates the tedious setup process and puts you directly into your workflow. No more late nights debugging package errors. No more fragile local setups that collapse with a single update. Just pure productivity, powered by GPUs in the cloud.

In this article, we’ll dive deep into how SimplePod’s pre-configured AI environments work, why they matter, and how they can supercharge your workflow—whether you’re a student tinkering with neural networks, a startup prototyping models, or a researcher running large-scale experiments.

The Traditional Setup Pain Points in AI/ML

Before appreciating the solution, it’s worth revisiting the problem.

For anyone working in machine learning, the setup phase can often feel like an initiation ritual. Want to train a model with TensorFlow or PyTorch? Get ready for:

- CUDA and GPU driver headaches – ensuring your GPU drivers align with CUDA versions is notoriously tricky. One mismatch, and you’re stuck troubleshooting for hours.

- Library conflicts – TensorFlow 2.13 might need a different version of NumPy than PyTorch. Add in Hugging Face transformers, SciPy, or scikit-learn, and the puzzle only gets more tangled.

- System dependencies – OS updates can break Python installations and compiled packages, especially on Windows. Even Linux users spend significant time managing virtual environments and containers.

- Wasted time – you wanted to experiment with an idea. Instead, you’ve spent three evenings just setting up the environment.

These friction points kill momentum. For professionals, time is money. For hobbyists, time is passion. In both cases, it’s frustrating to spend more energy wrestling with setup than actually building models.
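Many of these problems start with not knowing exactly what is installed. The script below is a minimal diagnostic sketch that uses only the standard library. It prints the Python version and the installed version of each core library, which is usually the first thing to check when NumPy, PyTorch, and TensorFlow disagree. The package list is an illustrative assumption, not a requirement of any particular platform.

```python
import sys
from importlib import metadata

# Illustrative list of packages whose versions commonly conflict in ML setups.
DEFAULT_PACKAGES = ("numpy", "scipy", "scikit-learn", "torch", "tensorflow", "transformers")


def installed_versions(packages=DEFAULT_PACKAGES):
    """Map each distribution name to its installed version, or None if it is absent."""
    report = {}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    for name, version in installed_versions().items():
        print(f"{name:>14}: {version or 'not installed'}")
```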

Introducing SimplePod.ai and Its Promise

SimplePod.ai is a GPU cloud provider designed with AI/ML enthusiasts in mind. Unlike many generic cloud platforms that overwhelm you with options, SimplePod takes a focused, developer-first approach.

- Rent powerful GPUs by the hour – starting as low as $0.05/hour for an RTX 3060 and up to $0.23/hour for an RTX 4090.

- No long-term contracts or hidden fees – pure pay-as-you-go flexibility.

- European-based infrastructure – low latency for European users and GDPR-friendly data handling.

- Pre-configured AI environments – so you can skip setup entirely and start coding right away.

The promise is simple: less friction, more flow. Instead of reinventing the wheel each time you want to train a model, SimplePod provides a clean, stable, GPU-accelerated environment tailored for AI and machine learning.

What’s in the Box: Pre-Configured Environments Offered

So, what exactly do you get when you fire up a SimplePod instance? Quite a lot, actually.

Currently, SimplePod offers ready-to-use setups for some of the most popular AI/ML frameworks:

- TensorFlow – with GPU acceleration pre-enabled, so you can jump straight into training CNNs, RNNs, or transformers.

- PyTorch – a favorite among researchers and enthusiasts thanks to its dynamic computation graphs and Hugging Face integration.

- Jupyter Notebook – the go-to interface for exploration, rapid prototyping, and data visualization.

- Llama & Ollama – perfect for enthusiasts experimenting with large language models (LLMs) in the open-source ecosystem.

- KoboldCpp – tailored for enthusiasts who want to run lightweight, self-hosted LLMs efficiently on GPUs.

Instead of starting with a blank machine and hours of installations, you get an environment that’s already tuned for AI/ML work. Imagine hitting “Start” and within minutes opening a Jupyter Notebook backed by an RTX 4090.

The key here is zero setup. Whether you want to fine-tune a Hugging Face model, train a GAN, or just test out reinforcement learning, the infrastructure is ready and waiting.
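Even with zero setup, a ten-second sanity check after launch is worthwhile. The sketch below assumes a PyTorch-based image; the function name `gpu_status` is hypothetical. It reports whether PyTorch can see the GPU and which CUDA version the installed build targets. If PyTorch isn't installed, it still returns a result instead of crashing.

```python
def gpu_status():
    """Summarize what PyTorch can see; returns a dict even if PyTorch is absent."""
    try:
        import torch  # assumption: present on a PyTorch environment
    except ImportError:
        return {"torch": None, "cuda_build": None, "cuda_available": False, "devices": []}

    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        # CUDA version this PyTorch build was compiled against (None for CPU-only builds)
        "cuda_build": torch.version.cuda,
        "cuda_available": available,
        # Human-readable names of every visible GPU, e.g. the card you selected
        "devices": [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
        if available
        else [],
    }


if __name__ == "__main__":
    for key, value in gpu_status().items():
        print(f"{key}: {value}")
```

On a TensorFlow environment, the equivalent check is `tf.config.list_physical_devices("GPU")`, which should return a non-empty list.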

How It Works: Launching in Minutes

Launching a pre-configured environment on SimplePod is straightforward, even for beginners.

- Choose your GPU – from cost-effective options like the RTX 3060 to powerhouse models like the RTX 4090.

- Select your environment – TensorFlow, PyTorch, Jupyter, or specialized frameworks like Llama.

- Launch – within minutes, you have a live, fully GPU-accelerated environment running in the cloud.

- Access via browser or SSH – either open Jupyter for quick exploration or connect remotely for advanced workflows.

The dashboard makes it beginner-friendly while still offering API access for power users who want automation.

Compared to larger cloud platforms, the simplicity is refreshing. There’s no labyrinth of services or confusing networking setups—just pick your GPU, pick your environment, and start coding.
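For the SSH route, a common pattern is to keep Jupyter running on the instance and forward its port to your own machine, so the notebook opens at `http://localhost:8888`. The sketch below only builds a standard OpenSSH port-forwarding command. The host address, SSH port, and `root` user are placeholder assumptions, so copy the real connection details from your instance page. It also assumes Jupyter listens on its default port, 8888.

```python
import shlex


def jupyter_tunnel_command(host, ssh_port=22, user="root", local_port=8888, remote_port=8888):
    """Build an OpenSSH command that forwards a remote Jupyter port to localhost.

    The default user and ports are assumptions; adjust them to your instance.
    """
    return [
        "ssh",
        "-N",  # run no remote command; just hold the tunnel open
        "-L", f"{local_port}:localhost:{remote_port}",  # local port -> instance's Jupyter port
        "-p", str(ssh_port),
        f"{user}@{host}",
    ]


if __name__ == "__main__":
    # Hypothetical connection details (203.0.113.0/24 is a documentation-only range).
    print(shlex.join(jupyter_tunnel_command("203.0.113.10", ssh_port=2222)))
```

Run the printed command, leave it open, and browse to `http://localhost:8888`. Because of `-N`, the SSH session only carries the tunnel and gives you no shell.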

Real Developer Gains: Productivity and Flow

Here’s where the magic really happens. Pre-configured environments aren’t just about convenience—they transform the way you work.

- Immediate prototyping – you can spin up a Jupyter notebook in five minutes and start testing your ideas. For researchers, this means faster iteration cycles.

- More time building, less time fixing – instead of spending days troubleshooting CUDA errors, you can spend that time tuning hyperparameters or optimizing architectures.

- Focus on learning and creativity – students and hobbyists don’t have to waste time fighting technical barriers. They can dive directly into neural networks, GANs, or LLM fine-tuning.

- Fewer context switches – nothing derails momentum like dropping into a rabbit hole of dependency issues. With SimplePod, you stay in “flow mode.”

Imagine this: you’re testing a new generative AI model. On a traditional local setup, you’d spend hours installing PyTorch with the right CUDA version. On SimplePod, you pick PyTorch from the environment list and you’re training within minutes.

That’s the difference between tinkering and thriving.

Advanced Customization: Tailoring Environments to Your Needs

Of course, not every project has the same requirements. SimplePod’s pre-configured environments are not rigid—they can be extended and customized.

- Install additional Python packages as needed (e.g., Hugging Face Transformers, spaCy, OpenCV).

- Save and persist your data/code so that your environment feels like “yours,” not just a disposable instance.

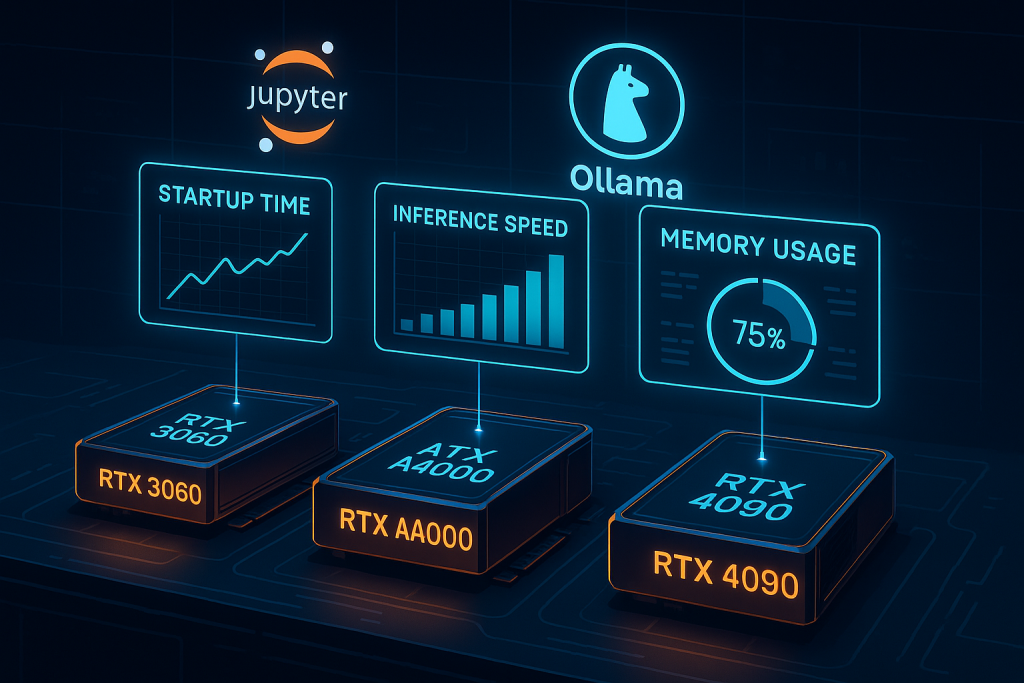

- Scale up or down – switch from a lightweight RTX 3060 to a more powerful RTX 4090 depending on your workload.

This hybrid approach—pre-configured but flexible—strikes the perfect balance. You get the best of both worlds: speed to start, freedom to adapt.
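Extending an environment usually means installing extra packages into the same interpreter that Jupyter or your scripts already use. The minimal sketch below assumes the three example packages mentioned above; the `EXTRAS` mapping and the helper names are illustrative. It finds which packages are missing and prints a `pip` command tied to the current interpreter through `sys.executable -m pip`, which avoids installing into the wrong environment.

```python
import importlib.util
import shlex
import sys

# Illustrative extras: import name -> PyPI distribution name.
EXTRAS = {
    "transformers": "transformers",
    "spacy": "spacy",
    "cv2": "opencv-python",
}


def missing_distributions(extras=EXTRAS):
    """Return the PyPI names of extras whose top-level module cannot be found."""
    return [dist for module, dist in extras.items() if importlib.util.find_spec(module) is None]


def pip_install_command(distributions):
    """Build `python -m pip install ...` bound to the *current* interpreter."""
    return [sys.executable, "-m", "pip", "install", *distributions]


if __name__ == "__main__":
    todo = missing_distributions()
    if todo:
        print(shlex.join(pip_install_command(todo)))
    else:
        print("All extras are already installed.")
```

Import names and PyPI names can differ (`cv2` comes from `opencv-python`), which is why the mapping is explicit. To install directly, pass the command list to `subprocess.check_call`. Inside a notebook, the `%pip install` magic does the same job.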

Cost Efficiency and Transparent Pricing

One of the biggest barriers in AI experimentation is cost. Many cloud providers charge opaque fees for storage, bandwidth, or idle time. SimplePod takes a different approach.

- RTX 3060: ~$0.05/hour

- RTX A2000: ~$0.06/hour

- RTX 4090: ~$0.23/hour

These rates are highly competitive, especially compared with AWS, GCP, or Azure, where GPU instances with comparable performance can cost 2–3x more per hour.

For hobbyists, this means you can tinker with deep learning models without breaking the bank. For startups, it means you can prototype affordably before scaling. For researchers, it means more experiments with the same budget.

And because it’s pay-as-you-go, you pay only for the hours an instance is actually running. Start an instance, use it, shut it down, and you’re done.
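With hourly billing, estimating a budget is simple multiplication. The sketch below hard-codes the approximate rates listed above. Rates can change, so treat them as assumptions and check current pricing. `session_cost` and `hours_for_budget` are illustrative helpers, not a SimplePod API.

```python
# Approximate hourly rates quoted in this article (USD); assumption, check current pricing.
HOURLY_RATE_USD = {
    "RTX 3060": 0.05,
    "RTX A2000": 0.06,
    "RTX 4090": 0.23,
}


def session_cost(gpu: str, hours: float) -> float:
    """Pay-as-you-go cost: hourly rate times the hours the instance was running."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(HOURLY_RATE_USD[gpu] * hours, 2)


def hours_for_budget(gpu: str, budget_usd: float) -> float:
    """How many GPU-hours a fixed budget buys at the listed rate."""
    return round(budget_usd / HOURLY_RATE_USD[gpu], 1)


if __name__ == "__main__":
    # Two hours a day for 30 days on an RTX 4090
    print(session_cost("RTX 4090", 2 * 30))  # 13.8
    # What $10 buys on each card
    for gpu in HOURLY_RATE_USD:
        print(gpu, hours_for_budget(gpu, 10))
```

At these rates, $10 covers about 43.5 hours on an RTX 4090 or about 200 hours on an RTX 3060.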

Ideal Users for This Setup

Who benefits most from pre-configured environments?

- Students – who want to learn AI/ML without drowning in setup issues.

- Researchers – who value faster iteration cycles and transparent pricing.

- Startups – who need to prototype models without committing to expensive infrastructure.

- Freelancers and hobbyists – who want quick access to GPUs without the hassle of building a local rig.

- Educators – who can use SimplePod to provide ready-made environments for classroom teaching.

In short: anyone who values their time and wants to focus on results, not roadblocks.

Conclusion

The AI/ML landscape is moving at lightning speed. Ideas emerge daily, and the faster you can test them, the faster you can learn, innovate, and push the field forward.

SimplePod.ai’s pre-configured AI environments are a powerful enabler of this momentum. They take away the pain of setup, give you access to powerful GPUs, and let you focus on what matters: building, training, and experimenting.

For enthusiasts, this means more nights spent coding neural networks instead of debugging CUDA. For researchers, it means more published results in less time. For startups, it means faster time-to-market.