Introduction

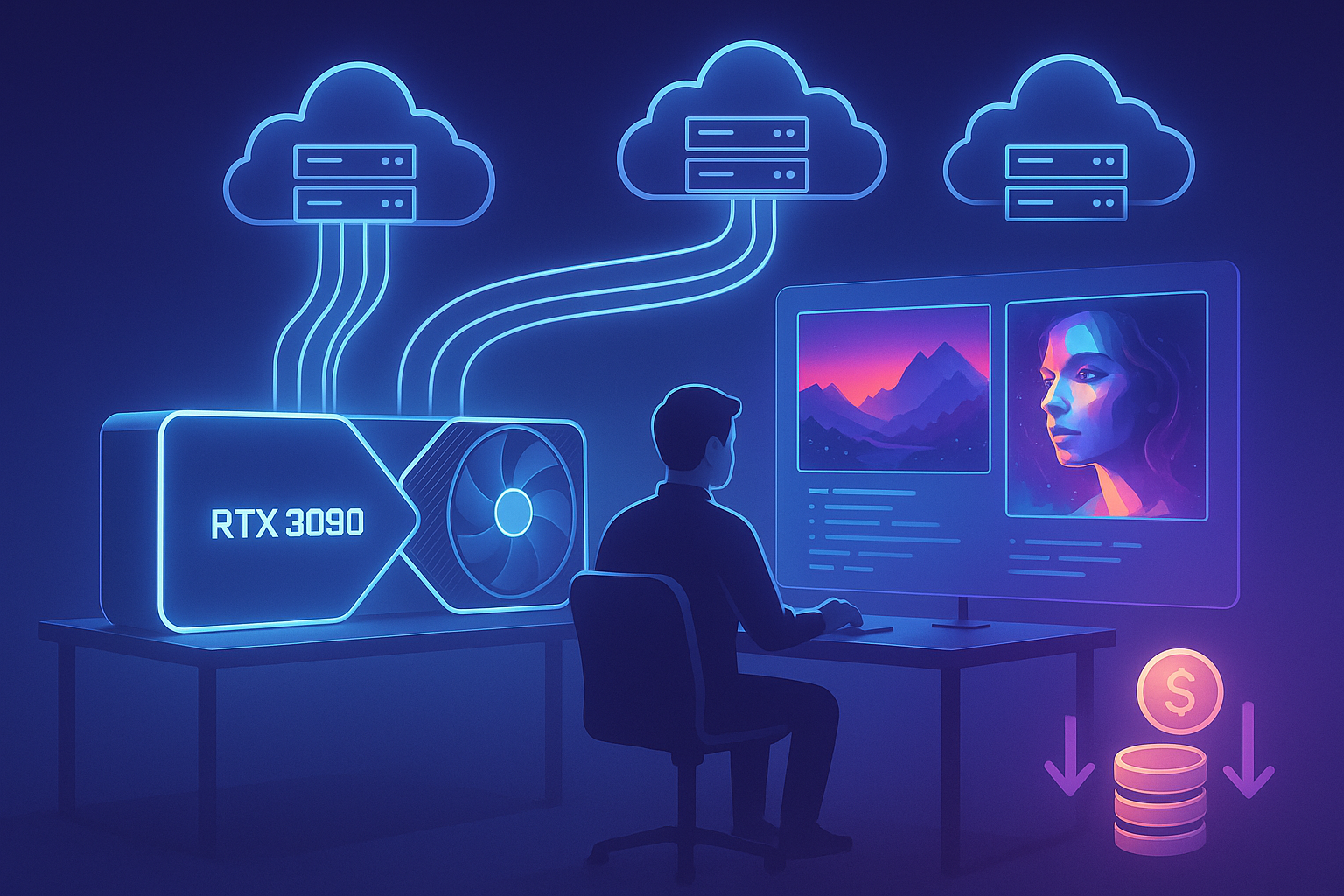

Not every AI workload needs the most expensive GPU. For many projects, the RTX 3090, with its 24 GB of VRAM, offers the perfect balance between power and efficiency.

Whether you’re training diffusion models, generating AI videos, or fine-tuning smaller language models, knowing when the 3090 is “enough” — and when it’s worth stepping up to a 4090 — can save you both time and money.

In this post, we’ll explore where the RTX 3090 shines in the cloud, where it starts to show limits, and how it compares to other GPUs available on SimplePod.

Why the RTX 3090 Still Matters

Even though newer cards exist, the 3090 remains one of the most balanced cloud options on SimplePod.

It delivers:

- 24 GB VRAM — ideal for most AI image, video, and model-training workloads,

- strong Tensor Core performance for FP16/BF16 deep-learning tasks,

- great price-to-performance ratio for creators, startups, and research teams.

Think of it as the workhorse GPU — powerful, stable, and versatile for almost any AI workflow.

Where the RTX 3090 Excels

1. Diffusion and Image Generation

If you’re running Stable Diffusion, SDXL, or ControlNet pipelines, the 3090 is a near-perfect fit.

Its 24 GB of VRAM comfortably handles high-resolution outputs (up to 2048 × 2048) and multi-model setups, such as SDXL combined with ControlNet, without out-of-memory errors.
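In diffusion, VRAM use is driven by resolution more than by model size. You can see this by counting latent positions. The sketch below assumes a VAE that downsamples by 8× per side (true of SD 1.x and SDXL) and a single attention layer running at full latent resolution. Real UNets also attend at several coarser levels, so the useful takeaway is the ratios, not the absolute numbers.

```python
# Sketch: how Stable Diffusion-style latents and naive self-attention cost
# scale with output resolution. Assumes an 8x-per-side VAE downsample and an
# attention layer at full latent resolution (a simplification).

VAE_DOWNSAMPLE = 8  # pixel-to-latent spatial factor (assumption stated above)


def latent_tokens(width: int, height: int) -> int:
    """Number of latent positions an attention layer would see."""
    if width % VAE_DOWNSAMPLE or height % VAE_DOWNSAMPLE:
        raise ValueError("resolution must be a multiple of 8")
    return (width // VAE_DOWNSAMPLE) * (height // VAE_DOWNSAMPLE)


def relative_cost(width: int, height: int, base=(1024, 1024)) -> tuple:
    """Return (token ratio, naive attention-matrix ratio) versus a base resolution."""
    ratio = latent_tokens(width, height) / latent_tokens(*base)
    return ratio, ratio ** 2  # naive attention scores grow with tokens squared


for w, h in [(1024, 1024), (1536, 1536), (2048, 2048)]:
    tok_ratio, attn_ratio = relative_cost(w, h)
    print(f"{w}x{h}: {latent_tokens(w, h):>6} tokens, "
          f"{tok_ratio:.2f}x tokens, {attn_ratio:.2f}x naive attention memory")
```

Doubling the side length from 1024 to 2048 quadruples the token count. With naive attention, it also multiplies the score-matrix memory by 16. Memory-efficient attention kernels (for example PyTorch's `scaled_dot_product_attention` or xFormers) avoid storing that full matrix, which is likely a big part of why 2048 × 2048 stays workable on a 24 GB card.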

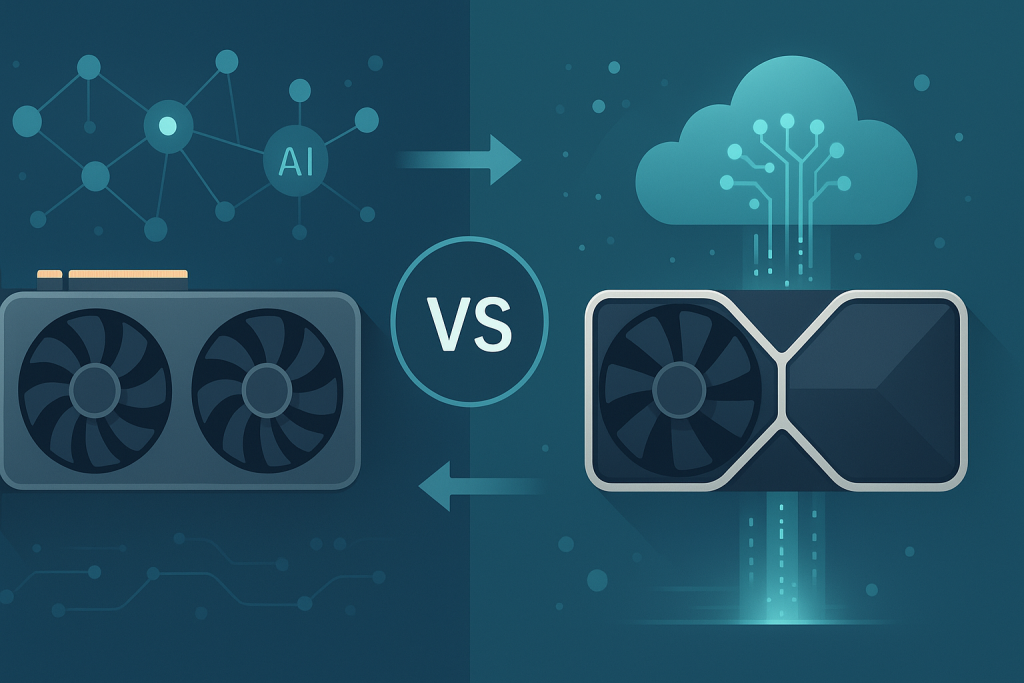

Compared to the RTX 4090, the 3090 runs about 15–25 % slower, but it remains a solid, stable option for long-running image-generation tasks.

2. Video Generation and Rendering

For AI-driven video creation with open models such as AnimateDiff, or for 3D rendering in Blender, Octane, or Redshift, the RTX 3090 offers excellent throughput.

Its 24 GB of memory provides enough headroom for multi-frame rendering or animation pipelines.

⚙️ If you’re pushing ultra-high-resolution (4K+) or multi-stream workloads, the RTX 4090 will finish jobs faster — but the 3090 remains the safer, more predictable choice for long sessions.

3. Small-to-Medium LLMs and Research Tasks

The 3090 comfortably handles fine-tuning and inference for models like Llama 2 7B, Mistral 7B, or Phi-3 Mini.

Its 24 GB of VRAM leaves enough room for gradient checkpointing and quantized training, so runs rarely crash with out-of-memory errors and need restarting.

Once you move into 13B+ territory, you'll likely need aggressive quantization or a GPU with more than 24 GB of VRAM (the RTX 4090 is faster, but it has the same 24 GB). For startups, indie developers, and educators, though, the 3090 remains a smart entry point into deep learning research.
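The rough static-memory estimate below puts the 7B vs 13B boundary in numbers. It is a sketch that rests on three assumptions. Parameter counts are nominal. Full fine-tuning with Adam in mixed precision follows the common rule of thumb of about 16 bytes per parameter. A hypothetical `HEADROOM` reserves 80 % of VRAM for weights and optimizer state, leaving the rest for activations, KV cache, and CUDA overhead, none of which are modeled.

```python
# Rough static-memory estimate for LLMs on a 24 GB card (RTX 3090).
# Ignores activations, KV cache, CUDA context and framework overhead,
# which is why only a fraction of VRAM is budgeted (HEADROOM is an assumption).

VRAM_GB = 24.0
HEADROOM = 0.8  # assumed share of VRAM available for weights/optimizer state

BYTES_PER_PARAM = {
    "fp16": 2.0,   # FP16/BF16 weights, inference
    "int8": 1.0,   # 8-bit quantized weights
    "int4": 0.5,   # 4-bit quantized weights (e.g. a QLoRA base model)
    # Full fine-tune, Adam, mixed precision:
    # fp16 weights (2) + fp16 grads (2) + fp32 master copy (4) + Adam m, v (4 + 4)
    "full_ft_adam": 16.0,
}


def static_gb(params_billion: float, mode: str) -> float:
    """Memory in GB (10^9 bytes) for weights plus any optimizer state implied by `mode`."""
    return params_billion * BYTES_PER_PARAM[mode]


def fits(params_billion: float, mode: str) -> bool:
    """True if the static estimate stays within the assumed VRAM budget."""
    return static_gb(params_billion, mode) <= VRAM_GB * HEADROOM


for size in (7, 13):
    for mode in BYTES_PER_PARAM:
        verdict = "fits" if fits(size, mode) else "does not fit"
        print(f"{size}B {mode}: ~{static_gb(size, mode):.1f} GB -> {verdict}")
```

Under these assumptions, a 7B model fits for FP16 inference (about 14 GB). A 13B model does not (about 26 GB) unless it is quantized. Full fine-tuning of even a 7B model is far beyond 24 GB, so LoRA-style or quantized fine-tuning is the usual route on a single 3090.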

Where the 3090 Starts to Struggle

You’ll notice limits when:

- Training or serving very large models that exceed 24 GB VRAM,

- Running batch-heavy inference with high concurrency,

- Demanding enterprise-level latency or continuous multi-GPU scaling.
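In LLM serving, concurrency is where 24 GB runs out fastest, because every in-flight request keeps its own key/value (KV) cache. The sketch below sizes that cache. Its defaults are Llama 2 7B's published shape (32 layers, 32 key/value heads, head dimension 128), and it assumes an FP16 cache with no paging or cache quantization. The function name and parameters are illustrative.

```python
# KV-cache size for a decoder-only transformer served with an FP16 cache.
# Default shape values follow Llama 2 7B's config; swap in your model's numbers.


def kv_cache_gib(seq_len: int, batch: int, layers: int = 32, kv_heads: int = 32,
                 head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """GiB needed to cache keys and values for `batch` sequences of `seq_len` tokens."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = keys + values
    return per_token * seq_len * batch / 2**30


for batch in (1, 4, 8, 16):
    print(f"batch {batch:>2} x 4096 tokens: {kv_cache_gib(4096, batch):.1f} GiB of KV cache")
```

Each full 4,096-token sequence needs about 2 GiB of cache. That comes on top of roughly 13–14 GB of FP16 weights for a 7B model, so a handful of concurrent long-context requests can exhaust the card. Models with grouped-query attention need proportionally less: Mistral 7B uses 8 KV heads, which corresponds to `kv_heads=8` here.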

For most creative and research workloads, though, you’ll rarely hit these bottlenecks.

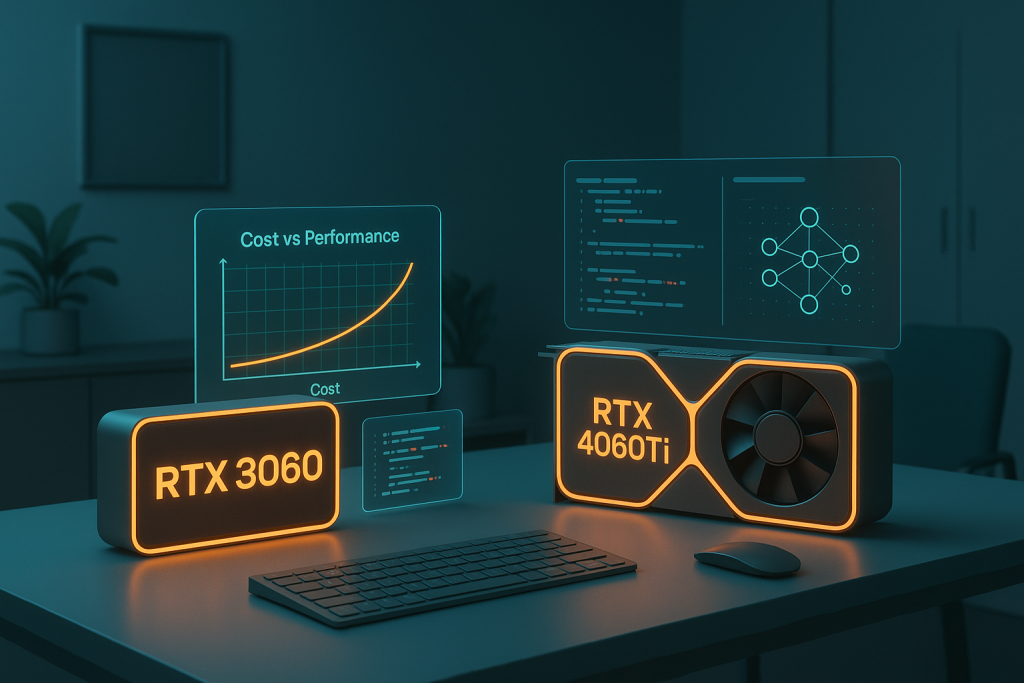

RTX 3090 vs Other SimplePod GPUs

| GPU | VRAM | Ideal Use Case | Notes |
|---|---|---|---|
| RTX 3060 | 12 GB | Entry-level training, smaller diffusion models | Great for quick experiments |
| RTX 3090 | 24 GB | Diffusion, video generation, LLM fine-tuning | Balanced performance and reliability |
| RTX 4090 | 24 GB | High-end AI workloads, faster rendering | ~20 % faster than 3090, better throughput |

💡 Insight:

The RTX 4090 delivers roughly 20 % higher performance, but the 3090 often wins in stability and availability, especially for extended training runs.
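Whether a faster GPU also saves money depends on its price premium versus its speedup. The sketch below compares cost per job. The hourly prices are hypothetical placeholders, not SimplePod's actual rates. The 1.2× relative speed simply encodes the ~20 % figure above, and real speedups vary by workload.

```python
# Cost-per-job comparison. HOURLY_PRICE_USD values are placeholders (hypothetical);
# RELATIVE_SPEED encodes the ~20 % figure quoted above and varies by workload.

HOURLY_PRICE_USD = {"RTX 3090": 0.30, "RTX 4090": 0.50}
RELATIVE_SPEED = {"RTX 3090": 1.0, "RTX 4090": 1.2}


def job_time_and_cost(hours_on_3090: float, gpu: str) -> tuple:
    """Wall-clock hours and total cost for a job that takes `hours_on_3090` on a 3090."""
    hours = hours_on_3090 / RELATIVE_SPEED[gpu]
    return hours, hours * HOURLY_PRICE_USD[gpu]


def faster_gpu_is_cheaper() -> bool:
    """The 4090 wins on cost only if its price ratio is below its speedup."""
    price_ratio = HOURLY_PRICE_USD["RTX 4090"] / HOURLY_PRICE_USD["RTX 3090"]
    speedup = RELATIVE_SPEED["RTX 4090"] / RELATIVE_SPEED["RTX 3090"]
    return price_ratio < speedup


for gpu in HOURLY_PRICE_USD:
    hours, cost = job_time_and_cost(10.0, gpu)
    print(f"{gpu}: {hours:.2f} h, ${cost:.2f}")
print("4090 cheaper per job:", faster_gpu_is_cheaper())
```

With these placeholder numbers, the 4090 finishes a 10-hour job about 1.7 hours sooner but costs more per job. Plug in current prices and a speedup measured on your own workload before deciding.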

Meanwhile, the 3060 is a great low-cost option for lightweight tasks or testing.

How to Maximize the 3090 in the Cloud

- Use mixed precision (FP16, or BF16 on Ampere cards like the 3090) and gradient checkpointing to reduce memory usage.

- Save checkpoints regularly to pause and resume jobs easily.

- Match the GPU to the workload — overpowered GPUs don’t always mean faster results.

- Schedule longer runs during off-peak hours to reduce queue times and latency.
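The checkpoint-and-resume tip can be sketched with the standard library alone. In this hypothetical example, the `train`, `save_checkpoint`, and `load_checkpoint` helpers, the JSON state, and the fake loss are all stand-ins. In a real PyTorch job, you would typically save the model's and optimizer's `state_dict()` with `torch.save`. The detail worth copying is the atomic write: dump to a temporary file, then `os.replace` it over the old checkpoint, so a pod stopped mid-save doesn't leave a half-written file.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(state: dict, path: str) -> None:
    """Atomically write `state` as JSON: temp file in the same directory, then rename."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # make sure bytes hit disk before the rename
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path: str) -> dict:
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss_history": []}


def train(total_steps: int, path: str, save_every: int = 100,
          stop_at: Optional[int] = None) -> dict:
    """Hypothetical training loop that resumes from `path` and checkpoints periodically."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if stop_at is not None and step == stop_at:
            break  # simulate pausing the pod at a given step
        loss = 1.0 / (step + 1)  # stand-in for a real forward/backward pass
        state["loss_history"].append(loss)
        state["step"] = step + 1
        if state["step"] % save_every == 0:
            save_checkpoint(state, path)
    save_checkpoint(state, path)  # final save on completion or graceful stop
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ckpt = os.path.join(tmp, "checkpoint.json")
        first = train(1000, ckpt, stop_at=400)  # paused at step 400
        resumed = train(1000, ckpt)             # picks up where it left off
        print(first["step"], resumed["step"], len(resumed["loss_history"]))
```

Writing the temporary file in the same directory as the checkpoint keeps both on one filesystem, which is what lets the final rename replace the old file in a single step.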

Conclusion

The RTX 3090 remains a top choice for its balance of cost, performance, and stability.

It’s ideal for diffusion models, AI video generation, and small-to-mid LLM training.

If your tasks demand more throughput, the RTX 4090 might offer an edge — but for most AI builders, the 3090 delivers the best mix of power, reliability, and value in the SimplePod cloud.