Introduction

If you’re working on an AI project, one of the biggest questions is: which GPU should you rent in the cloud? On SimplePod, two of the most popular options are the RTX 3060 and the RTX 4090.

Both GPUs are powerful, but they serve very different needs. In this article, we’ll compare them head-to-head in terms of speed, VRAM, power consumption, and hourly cost. We’ll also look at real benchmarks from AI training and inference tasks, from small models to large LLMs¹, so you can decide which GPU gives you the best value for your workload.

1 Large Language Model – a deep learning model with billions of parameters, trained on huge text datasets, capable of generating and understanding human-like text.

Why Compare RTX 3060 vs RTX 4090 on SimplePod?

When you rent a GPU on SimplePod, you pay per hour of usage. Choosing the wrong card means either:

- overpaying for performance you don’t actually need, or

- wasting hours waiting on training because your GPU is underpowered: a job that would finish quickly on a stronger card drags on much longer, and you wait (and pay) for every extra hour.

That’s why it’s worth comparing the RTX 3060 and RTX 4090 side by side. They’re often chosen by different groups: the 3060 for budget-conscious developers and smaller models, and the 4090 for power users working on cutting-edge AI research.

Technical Specifications: The Numbers

| Feature | RTX 3060 | RTX 4090 |
|---|---|---|
| VRAM | 12 GB GDDR6 | 24 GB GDDR6X |
| CUDA Cores² | ~3,584 | ~16,384 |
| Tensor Cores³ | ~112 | ~512 |
| Memory Bandwidth⁴ | ~360 GB/s | ~1,008 GB/s |
| Power (TDP⁵) | ~170 W | ~450 W |

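Key takeaway: The RTX 4090 has double the VRAM, nearly 3× the memory bandwidth (~2.8×), and about 4.6× as many CUDA and Tensor cores. That generally translates into faster training and the ability to handle much larger models, though real-world speedups vary by workload and don’t scale one-to-one with these ratios.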

2 The general-purpose parallel processors inside an NVIDIA GPU.

3 Specialized processors in NVIDIA GPUs designed to accelerate deep learning computations.

4 The rate at which data moves between GPU cores and VRAM. A higher bandwidth means faster data transfer and less chance of bottlenecks.

5 Thermal Design Power – the maximum power draw and heat output a GPU typically produces under full load.
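To check the multipliers in the key takeaway, this short Python sketch divides each RTX 4090 figure from the table by the matching RTX 3060 figure. The `SPECS` and `spec_ratios` names are illustrative; keep in mind that raw spec ratios are only a rough guide, since measured speedups depend heavily on the workload.

```python
# Spec values from the table above: (RTX 3060, RTX 4090).
SPECS = {
    "VRAM (GB)": (12, 24),
    "CUDA cores": (3584, 16384),
    "Tensor cores": (112, 512),
    "Memory bandwidth (GB/s)": (360, 1008),
    "TDP (W)": (170, 450),
}


def spec_ratios(specs: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return how many times larger each RTX 4090 figure is, rounded to 0.1."""
    return {name: round(rtx4090 / rtx3060, 1) for name, (rtx3060, rtx4090) in specs.items()}


for name, ratio in spec_ratios(SPECS).items():
    print(f"{name}: {ratio}x")
```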

Performance: Training vs Inference

Training Performance

Small Models / Small Batch Sizes

If you’re training a small neural network (e.g., a lightweight image classifier or voice model), the RTX 3060 can get the job done. Benchmarks show that the 4090 is typically 1.5–2× faster than the 3060 in these scenarios. That’s noticeable, but not always worth paying roughly 6× the hourly cost (at the example SimplePod rates used in this article) if your project is small-scale.

Large Models / Large Batch Sizes

When training larger models like Llama-2, Stable Diffusion XL, or Tortoise TTS⁶, the RTX 4090 pulls ahead dramatically. In one test, a Tortoise TTS training run took ~200 minutes on the RTX 3060 versus just ~36 minutes on the RTX 4090, a speedup of more than 5×.

Even more importantly, the extra VRAM on the 4090 lets you use larger batch sizes⁷, which increases throughput and training efficiency. On the 3060, you’ll often hit out-of-memory errors and need to fall back on gradient accumulation⁸ or offloading, both of which slow training down.

6 TTS = Text-to-Speech, an AI model that converts written text into human-like speech. These models are very compute-intensive, which is why the 4090 handles them much better.

7 Batch size – how many training examples are processed in one step. Larger batch sizes = faster training throughput, but they require more VRAM.

8 Gradient accumulation – a method to simulate larger batches on GPUs with limited VRAM. It processes several small batches, accumulates their gradients, and then updates the weights once. This lets smaller cards (like the 3060) train with larger effective batch sizes, but it slows training down because each weight update now takes several sequential passes instead of one.
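Here is a minimal, framework-free sketch of gradient accumulation, assuming a toy one-parameter linear model trained with mean squared error (the model, data, and helper names are all hypothetical). It shows that accumulating weighted micro-batch gradients reproduces the full-batch gradient, while each weight update now costs several sequential passes. In PyTorch the same idea usually appears as calling `loss.backward()` on each scaled micro-batch loss and `optimizer.step()` once per effective batch.

```python
# Toy gradient accumulation: a 1-parameter linear model (y = w * x) trained
# with mean squared error. Pure Python, so it runs without any ML framework.

def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Split the batch into micro-batches and accumulate their gradients.

    Each micro-batch gradient is weighted by its share of the full batch,
    so the sum equals the full-batch gradient (up to float rounding).
    """
    n = len(xs)
    total = 0.0
    for start in range(0, n, micro_batch_size):
        mx = xs[start:start + micro_batch_size]
        my = ys[start:start + micro_batch_size]
        total += grad_mse(w, mx, my) * len(mx) / n  # one small forward/backward pass
    return total


def train(xs, ys, micro_batch_size, steps=50, lr=0.01):
    """Plain gradient descent with one weight update per effective (full) batch."""
    w = 0.0
    for _ in range(steps):
        w -= lr * accumulated_grad(w, xs, ys, micro_batch_size)
    return w


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [3.0 * x for x in xs]  # the true weight is 3.0
print(grad_mse(0.0, xs, ys), accumulated_grad(0.0, xs, ys, micro_batch_size=2))
print(train(xs, ys, micro_batch_size=2))
```

With `micro_batch_size=2`, every update runs four small passes instead of one large one: the same math, lower peak memory, more wall-clock time.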

Inference Performance

Small Queries / Small Models

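For light inference tasks (e.g., serving a chatbot on a 7B⁹ model), both GPUs can work, although on the 3060 the model usually needs to be quantized to 8-bit or 4-bit, because a 7B model’s weights alone take about 14 GB at 16-bit precision. Benchmarks show the 4090 generates tokens ~2.5–3× faster than the 3060. If latency matters, for example in a real-time app, that speed difference can be critical.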

9 7B – 7 billion parameters (the weights inside a neural network). More parameters generally mean the model is more capable but also requires more memory and compute power.
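The link between parameter count and memory can be estimated roughly: weight memory is about parameters × bytes per parameter. The Python sketch below assumes 2 bytes for fp16, 1 for int8, and 0.5 for int4, treats 1 GB as 10^9 bytes, and counts weights only, so it is a lower bound rather than a real sizing tool. The helper names are illustrative.

```python
# Rough, weights-only VRAM estimate: parameters x bytes per parameter.
# It ignores activations, the KV cache, optimizer state, and framework overhead,
# so treat the result as a lower bound on what a model really needs.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
GPU_VRAM_GB = {"RTX 3060": 12, "RTX 4090": 24}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate weight memory in GB (1e9 params x bytes per param / 1e9)."""
    return params_billions * BYTES_PER_PARAM[precision]


def weights_fit(params_billions: float, precision: str, gpu: str) -> bool:
    """True if the weights alone take strictly less than the card's VRAM."""
    return weights_gb(params_billions, precision) < GPU_VRAM_GB[gpu]


for size in (7, 13):
    for precision in BYTES_PER_PARAM:
        gb = weights_gb(size, precision)
        fits_on = [gpu for gpu in GPU_VRAM_GB if weights_fit(size, precision, gpu)]
        print(f"{size}B @ {precision}: ~{gb:.1f} GB -> fits on {fits_on or 'neither'}")
```

Because the estimate skips activations and the KV cache, a model that only barely "fits" here will usually still run out of memory in practice.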

Large Models / Production Workloads

When serving larger models or handling many parallel requests, the RTX 4090’s extra VRAM and bandwidth make a major difference. It can keep larger models entirely in GPU memory, without offloading, and serve users with much lower latency.

Some community tests posted on Reddit even showed that four RTX 3060 cards could outperform a single 4090 on certain parallel workloads. But for single-request performance and large LLMs, the 4090 dominates.

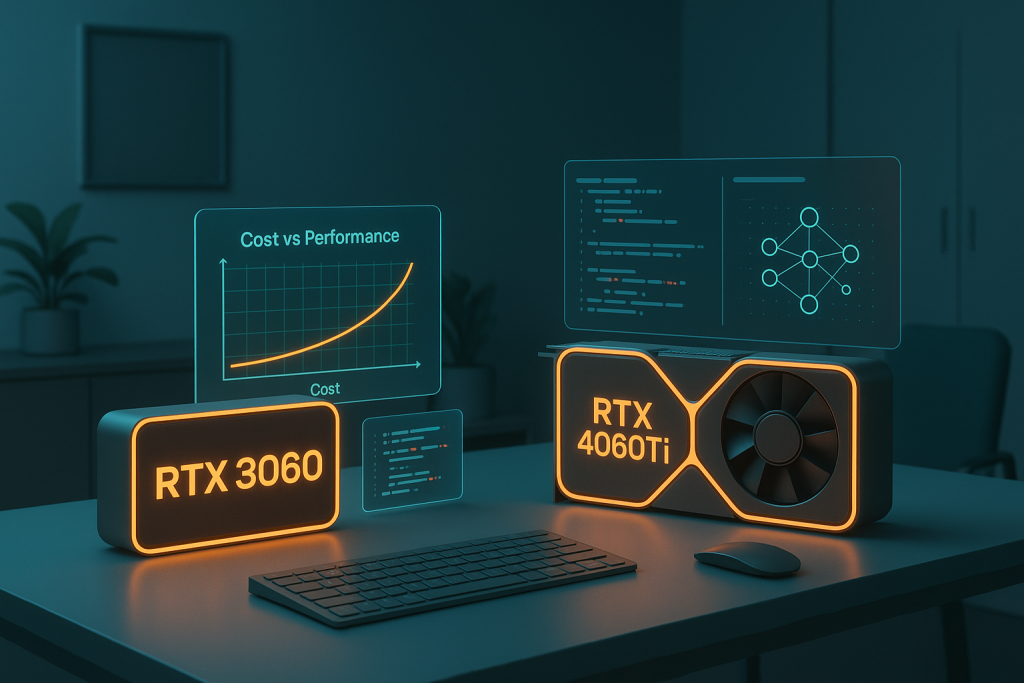

Cost Efficiency: Hourly Rates on SimplePod

On SimplePod, pricing is pay-as-you-go. Let’s assume:

- RTX 3060 costs $0.05/hour

- RTX 4090 costs $0.30/hour

Cost vs Speed

At these rates, the 4090 costs 6× as much per hour as the 3060. This means:

- If the 4090 is at least 6× faster, it breaks even or becomes cheaper per job (you pay more per hour, but the job finishes in proportionally less time).

- If the 4090 is only 2–3× faster (typical in many real-world cases), then the 3060 usually gives you much better value per dollar, even though it runs slower.
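The break-even rule is just a ratio of per-job costs. Writing $t$ for a job’s runtime on the RTX 3060 (in hours), $s$ for the 4090’s speedup on that job, and $p_{3060}$, $p_{4090}$ for the example hourly rates (symbols introduced here for illustration), and assuming the job involves the same total work on both cards:

```latex
\begin{aligned}
C_{3060} &= t \, p_{3060}, \qquad C_{4090} = \frac{t}{s} \, p_{4090} \\
C_{4090} \le C_{3060} &\iff \frac{t}{s} \, p_{4090} \le t \, p_{3060}
  \iff s \ge \frac{p_{4090}}{p_{3060}} = \frac{0.30}{0.05} = 6 \\
\frac{C_{4090}}{C_{3060}} &= \frac{p_{4090}}{s \, p_{3060}} = \frac{6}{s}
\end{aligned}
```

So at a 3× speedup the 4090 costs about twice as much per job, and at a 2× speedup about three times as much.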

Example Cost Calculations

| Scenario | RTX 3060 | RTX 4090 | More Cost-Efficient? |
|---|---|---|---|
| Training a small model | 2h × $0.05 = $0.10 | 1h × $0.30 = $0.30 | 3060 |
| Training on a large TTS dataset | 200 min (~3.3h) × $0.05 ≈ $0.17 | 36 min (0.6h) × $0.30 = $0.18 | Nearly the same, slight edge to 3060 |
| Massive LLM training | 20h × $0.05 = $1.00 | 2h × $0.30 = $0.60 | 4090 |
| 100h of inference serving | 100h × $0.05 = $5.00 | 100h × $0.30 = $30.00 | 3060, unless ultra-low latency is critical |
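The table boils down to one formula, cost per job = runtime × hourly rate, so it is easy to rerun with your own numbers. This Python sketch uses the article’s example rates and runtimes; `RATES`, `SCENARIOS`, and `compare` are illustrative names, and the inference row is modeled as a fixed 100-hour serving window on both cards.

```python
# Reproduce the cost table: cost per job = runtime (hours) x hourly rate.
RATES = {"RTX 3060": 0.05, "RTX 4090": 0.30}  # example $/hour assumed in this article

# (scenario, hours on RTX 3060, hours on RTX 4090), taken from the table above.
# The inference row is a fixed 100-hour serving window, so hours are equal.
SCENARIOS = [
    ("Training a small model", 2.0, 1.0),
    ("Training on a large TTS dataset", 200 / 60, 36 / 60),
    ("Massive LLM training", 20.0, 2.0),
    ("100h of inference serving", 100.0, 100.0),
]


def compare(hours_3060: float, hours_4090: float) -> tuple[float, float, str]:
    """Return (3060 cost, 4090 cost, cheaper GPU), costs rounded to cents."""
    cost_3060 = hours_3060 * RATES["RTX 3060"]
    cost_4090 = hours_4090 * RATES["RTX 4090"]
    cheaper = "RTX 3060" if cost_3060 <= cost_4090 else "RTX 4090"
    return round(cost_3060, 2), round(cost_4090, 2), cheaper


for name, h3060, h4090 in SCENARIOS:
    c3060, c4090, cheaper = compare(h3060, h4090)
    print(f"{name}: ${c3060:.2f} vs ${c4090:.2f} -> {cheaper}")
```

Swap in runtimes from your own benchmark runs and current SimplePod rates to get a comparison for your actual workload.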

Power Consumption & Practical Limits

- RTX 3060 draws far less power (~170 W vs ~450 W TDP). If electricity costs are part of your calculation (on-prem vs cloud), this may matter.

- RTX 4090 requires much more power and cooling, but SimplePod handles this for you in the cloud.

- VRAM limitations: The 3060’s 12 GB quickly becomes a bottleneck on models of 13B¹⁰ parameters and up. The 4090’s 24 GB gives you breathing room.

10 13B = 13 billion parameters. Models of this size and larger often don’t fit into the 12 GB VRAM of the RTX 3060: even at 8-bit precision, a 13B model’s weights alone take about 13 GB. The RTX 4090, with 24 GB VRAM, can handle them much more comfortably.
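If you do run hardware on-prem, what you pay for is energy per job, which depends on runtime as well as power draw. This Python sketch uses TDP × runtime as an upper-bound estimate of GPU energy, with the Tortoise TTS runtimes quoted earlier; the electricity price is a hypothetical placeholder, not a figure from this article, and the helper names are illustrative.

```python
# Upper-bound GPU energy per job: TDP (watts) x runtime (hours).
# TDP values come from the spec table; real average draw is usually lower,
# and this ignores the CPU, RAM, cooling, and the rest of the system.
TDP_WATTS = {"RTX 3060": 170, "RTX 4090": 450}


def energy_kwh(gpu: str, hours: float) -> float:
    """Estimate GPU energy for one job in kilowatt-hours (upper bound)."""
    return TDP_WATTS[gpu] * hours / 1000


def energy_cost(gpu: str, hours: float, price_per_kwh: float) -> float:
    """Electricity cost of one job; price_per_kwh is your own local rate."""
    return energy_kwh(gpu, hours) * price_per_kwh


# Runtimes from the Tortoise TTS example: ~200 min on the 3060, ~36 min on the 4090.
PRICE_PER_KWH = 0.15  # HYPOTHETICAL electricity price in $/kWh, not from this article
for gpu, hours in [("RTX 3060", 200 / 60), ("RTX 4090", 36 / 60)]:
    kwh = energy_kwh(gpu, hours)
    print(f"{gpu}: {kwh:.2f} kWh, ~${energy_cost(gpu, hours, PRICE_PER_KWH):.3f}")
```

Under these upper-bound assumptions, the 4090’s higher draw is offset by the much shorter run, so lower power per hour does not automatically mean lower energy per job.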

When Should You Choose Each?

When to Rent the RTX 3060

- Budget is your top priority.

- You’re training small models.

- You’re prototyping or experimenting.

- You don’t need low-latency inference.

When to Rent the RTX 4090

- You’re training large models (LLMs, generative AI, multimodal).

- You need low-latency inference for production workloads.

- You want to maximize throughput and shorten training time.

- Budget allows for premium performance.

Practical Tips

- Benchmark your own workload: Run a small training job on both GPUs to see real differences.

- Use gradient accumulation on smaller GPUs to reach larger effective batch sizes, but know that it slows training.

- Batch size tuning: Larger VRAM = larger batches = better GPU utilization.

- Consider multi-GPU scaling: Sometimes multiple 3060s can outperform a single 4090 for specific parallel tasks.

- Always check SimplePod pricing: Rates can change, and promotions may make premium GPUs more affordable.
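Batch size tuning can be automated with a common search pattern: keep doubling the batch size until it stops fitting, then binary-search between the last size that fit and the first that didn’t. This is a sketch under stated assumptions: `largest_batch_size` and `simulated_fits` are hypothetical helpers, and the memory model (6 GB fixed plus 0.25 GB per sample) is made up purely for illustration. On a real GPU, `fits` would run one training step at that batch size and return `False` when the framework raises an out-of-memory error.

```python
from typing import Callable


def largest_batch_size(fits: Callable[[int], bool], start: int = 1, limit: int = 4096) -> int:
    """Return the largest batch size b in [start, limit] with fits(b) True, or 0.

    Assumes fits() is monotonic: if a batch size fits, every smaller one fits too.
    """
    if not fits(start):
        return 0
    low, high = start, start * 2  # low is known to fit; high is the next candidate
    # Phase 1: double until a size fails to fit or we pass the limit.
    while high <= limit and fits(high):
        low, high = high, high * 2
    high = min(high, limit + 1)  # treat anything above the limit as "does not fit"
    # Phase 2: binary search; invariant: low fits, high does not.
    while high - low > 1:
        mid = (low + high) // 2
        if fits(mid):
            low = mid
        else:
            high = mid
    return low


def simulated_fits(vram_gb: float, base_gb: float = 6.0, per_sample_gb: float = 0.25):
    """HYPOTHETICAL memory model: fixed cost plus a per-sample activation cost."""
    return lambda batch_size: base_gb + per_sample_gb * batch_size <= vram_gb


print(largest_batch_size(simulated_fits(12)))  # 12 GB card, like the RTX 3060
print(largest_batch_size(simulated_fits(24)))  # 24 GB card, like the RTX 4090
```

In practice, leave some headroom below the largest size that fits, since memory use can fluctuate between steps.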

Conclusion: RTX 3060 vs RTX 4090 on SimplePod

In the battle of RTX 3060 vs RTX 4090 on SimplePod, there’s no one-size-fits-all answer:

- The RTX 3060 is ideal for budget-friendly projects, prototyping, and smaller models.

- The RTX 4090 shines when working with large AI models, real-time inference, and workloads where time-to-results is critical.

👉 Recommendation: If you’re just getting started or running smaller models, rent the RTX 3060. But if you need serious horsepower for training LLMs or production inference, go with the RTX 4090.

Ready to try it yourself? Rent your GPU on SimplePod today

Check out the available GPUs on SimplePod.ai today, run a quick benchmark on your project, and choose the best fit for your AI workflow.