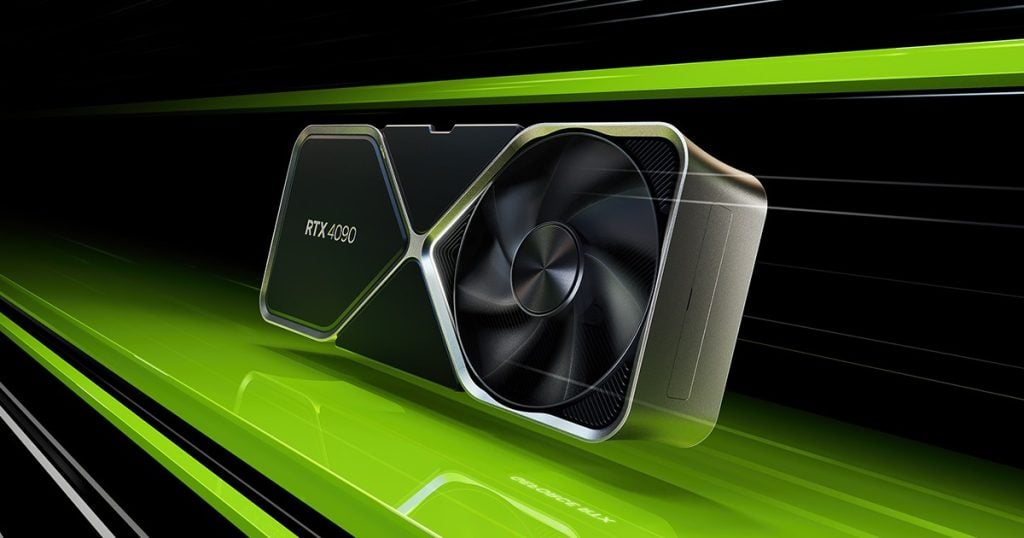

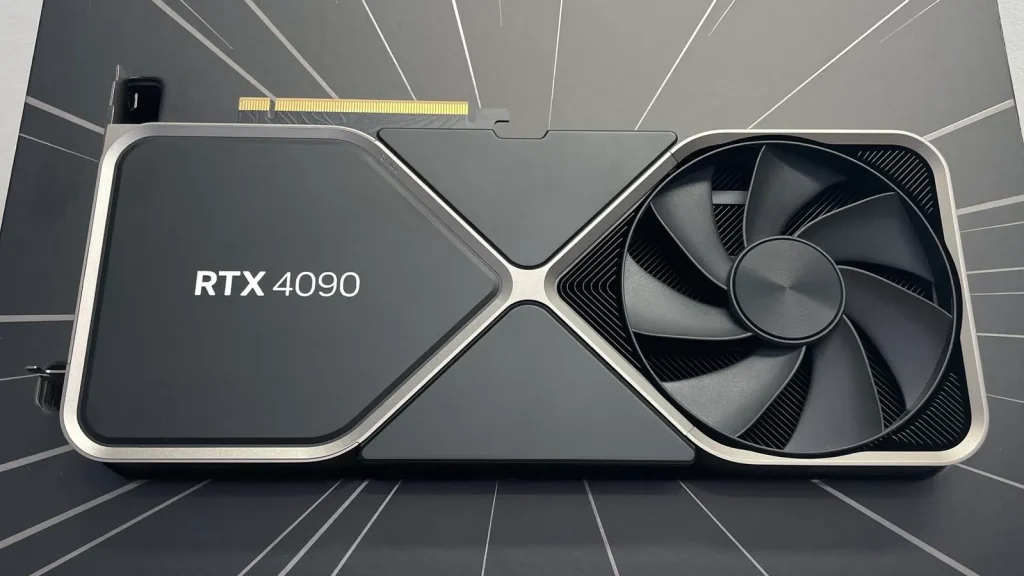

NVIDIA RTX 4090: A Game-Changing GPU for AI Professionals

The NVIDIA RTX 4090 is one of the most powerful consumer-grade GPUs ever released, with hardware well suited to AI development, machine learning, and deep learning. With fourth-generation Tensor Cores, 24GB of VRAM, and better performance per watt than its predecessor, the RTX 4090 is a game-changer for AI applications. However, its high price tag raises questions: Is the NVIDIA RTX 4090 worth it for AI professionals? Should data scientists and researchers consider cloud computing or GPU rental services instead?

This article breaks down the NVIDIA RTX 4090 price, performance benchmarks, and machine learning capabilities to help you decide whether purchasing this GPU is necessary or if GPU rental services present a more viable solution.

Key Features of the RTX 4090

The RTX 4090 is packed with innovations that make it a top choice for AI research and data science:

- 16,384 CUDA Cores – Ensuring faster parallel processing for AI model training and deep learning.

- 24GB GDDR6X VRAM – Room for larger models and batch sizes in machine learning workloads.

- 4th Gen Tensor Cores – Accelerate mixed-precision (FP16, BF16, and FP8) matrix math for deep learning training and inference.

- DLSS 3 – AI upscaling and frame generation, primarily a gaming feature.

- High Memory Bandwidth (1,008GB/s) – Keeps the cores supplied with data, which matters for memory-bound deep learning workloads.

- Power Efficiency – Rated at 450W, but delivers more performance per watt than previous generations.
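The headline specs above can be connected with two standard back-of-the-envelope formulas: peak FP32 throughput is CUDA cores × 2 FLOPs per fused multiply-add × clock speed, and peak memory bandwidth is per-pin data rate × bus width ÷ 8. The sketch below assumes the RTX 4090's published 2.52 GHz boost clock, 21 Gbps memory data rate, and 384-bit memory bus, none of which appear in the list itself. These are theoretical peaks; real training speed also depends on Tensor Core utilization, numeric precision, and software.

```python
# Theoretical peak numbers from published specs (assumed inputs, see lead-in).


def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Each CUDA core can complete one fused multiply-add (2 FLOPs) per clock."""
    gflops = cuda_cores * 2 * boost_clock_ghz
    return gflops / 1000  # GFLOPS -> TFLOPS


def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) times bus width (bits), converted from bits to bytes."""
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # Assumed RTX 4090 inputs: 16,384 CUDA cores, 2.52 GHz boost, 21 Gbps GDDR6X, 384-bit bus
    print(f"RTX 4090 FP32 peak: {peak_fp32_tflops(16_384, 2.52):.1f} TFLOPS")  # 82.6
    print(f"RTX 4090 bandwidth: {peak_bandwidth_gbs(21, 384):,.0f} GB/s")  # 1,008
```

The bandwidth result matches the 1,008GB/s figure in the list, which is a useful sanity check on the assumed memory inputs.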

With these capabilities, the RTX 4090 is a strong option for data scientists, AI researchers, and professionals working with complex AI models.

RTX 4090 Performance Benchmarks for AI & Deep Learning

Performance is a key factor when investing in a GPU, and the RTX 4090 does not disappoint. Several benchmark tests highlight its capabilities:

AI Training & Deep Learning Workloads

- TensorFlow & PyTorch Model Training: The RTX 4090 shows a 30-50% improvement over the RTX 3090 in training AI models.

- Natural Language Processing (NLP): Faster text processing with transformer models like BERT and GPT-2.

- Computer Vision Tasks: Faster training for object detection, segmentation, and classification, with inference fast enough for real-time applications.

- Reinforcement Learning: Improved computational efficiency in training AI agents for robotics and automation.

- Generative AI & Large Language Models: Faster fine-tuning of open models such as the 7B variants of Llama 2 and Falcon, typically with parameter-efficient methods like LoRA or QLoRA.
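Whether a model fits on a 24GB card can be estimated from the bytes stored per parameter. The sketch below uses common rules of thumb (2 bytes per parameter for FP16 weights, 0.5 for 4-bit quantized weights, and roughly 16 for full fine-tuning with mixed-precision Adam) and deliberately ignores activations, the KV cache, and framework overhead, so real requirements are higher. The mode names and helper functions are illustrative assumptions, not part of any library.

```python
# Rule-of-thumb memory for model state only. Activations, KV cache, CUDA context,
# and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {
    "fp16_inference": 2.0,       # FP16/BF16 weights only
    "qlora_4bit_base": 0.5,      # 4-bit quantized frozen base (LoRA adapters add a little)
    "full_finetune_adam": 16.0,  # FP16 weights + FP16 grads + FP32 master weights + 2 FP32 Adam moments
}

# Conservative budget: the card's 24 GiB is about 25.8e9 bytes.
RTX_4090_BUDGET_GB = 24.0


def model_state_gb(params_billions: float, mode: str) -> float:
    """Approximate gigabytes (1e9 bytes) of model state for the given mode."""
    return params_billions * BYTES_PER_PARAM[mode]


def fits_on_rtx_4090(params_billions: float, mode: str) -> bool:
    """True if the model state alone stays under the 24GB budget."""
    return model_state_gb(params_billions, mode) < RTX_4090_BUDGET_GB


if __name__ == "__main__":
    for name, size_b in [("7B model", 7), ("13B model", 13), ("175B (GPT-3 scale)", 175)]:
        for mode in BYTES_PER_PARAM:
            gb = model_state_gb(size_b, mode)
            print(f"{name:20} {mode:20} {gb:8.1f} GB  fits: {fits_on_rtx_4090(size_b, mode)}")
```

Under these assumptions a 7B model needs about 3.5GB for 4-bit weights but around 112GB for full fine-tuning, which is why parameter-efficient methods are the usual route on a single RTX 4090.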

RTX 4090 vs. RTX 3090 for AI: Which One is Better?

The RTX 4090 vs. RTX 3090 comparison for AI comes down to a few clear improvements that make the newer card the stronger choice:

- Same VRAM, Faster Tensor Cores: Both cards have 24GB of GDDR6X VRAM, but the RTX 4090 offers higher memory bandwidth (1,008GB/s vs. 936GB/s) and fourth-generation Tensor Cores, compared to the RTX 3090's third-generation ones.

- More CUDA Cores: 16,384 cores on the RTX 4090, compared to 10,496 cores on the RTX 3090.

- Better Power Efficiency: The RTX 4090 has a higher power rating (450W vs. 350W) but finishes work faster, so it can use less energy per training run and reduce operating costs.
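Power efficiency here means energy per job rather than power draw: energy is board power × run time, so a faster card can draw more watts yet use fewer kilowatt-hours. The sketch below assumes both cards run at their rated board power (450W for the RTX 4090, 350W for the RTX 3090) for the entire job and takes the speedup as an input, for example from the 30-50% range cited above. Actual power draw during training varies with the workload.

```python
from __future__ import annotations

RTX_3090_POWER_W = 350  # rated board power
RTX_4090_POWER_W = 450  # rated board power


def energy_kwh(board_power_w: float, hours: float) -> float:
    """Energy used at a constant power draw: watts x hours / 1000."""
    return board_power_w * hours / 1000


def compare_energy(hours_on_3090: float, speedup_4090: float) -> tuple[float, float]:
    """Return (RTX 3090 kWh, RTX 4090 kWh) for the same job, assuming full rated power."""
    e_3090 = energy_kwh(RTX_3090_POWER_W, hours_on_3090)
    e_4090 = energy_kwh(RTX_4090_POWER_W, hours_on_3090 / speedup_4090)
    return e_3090, e_4090


if __name__ == "__main__":
    print(f"Energy break-even speedup: {RTX_4090_POWER_W / RTX_3090_POWER_W:.2f}x")  # 1.29x
    for speedup in (1.3, 1.5):  # ends of the 30-50% range cited above
        e_3090, e_4090 = compare_energy(100, speedup)
        print(f"{speedup}x: RTX 3090 {e_3090:.1f} kWh vs RTX 4090 {e_4090:.1f} kWh")
```

With these assumptions the RTX 4090 uses less energy per job whenever it is more than about 1.29× faster; at 1.5×, a 100-hour RTX 3090 job drops from 35 kWh to 30 kWh.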

For AI researchers and data scientists, the RTX 4090 is the better long-term investment due to enhanced deep learning and machine learning capabilities.

RTX 4090 Price & Availability

The NVIDIA RTX 4090 launched at an MSRP of $1,599, but its price varies by region, retailer, and demand. As of 2025, it typically sells for between $1,599 and $2,500, depending on market conditions.

Factors Influencing RTX 4090 Price:

- Market demand & stock shortages – Limited availability drives up costs.

- Import taxes & regional differences – Prices fluctuate based on location.

- Scalper resale prices – Push market rates above MSRP.

- Technology advancements – Future GPU releases could impact pricing trends.

With fluctuating prices and limited RTX 4090 availability, cloud-based GPU rental is an increasingly viable option for AI professionals.

Why GPU Rental and Cloud Computing Are the Better Choice

Purchasing an RTX 4090 requires a significant financial commitment, but cloud-based solutions provide access to the same level of computing power without the high upfront investment.

Advantages of GPU Rental Services

- Lower Costs: Pay only for the GPU power you need, avoiding high upfront expenses.

- Scalability: Scale computing resources up or down as project requirements change.

- No Maintenance: The provider covers hardware upkeep, power, and cooling, so you avoid electricity bills and the cost of hardware degradation.

- Access to Enterprise-Grade GPUs: Cloud platforms provide access to data center GPUs such as the NVIDIA A100 and H100, available in 80GB versions with far more memory than the RTX 4090's 24GB.
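Whether renting beats buying depends on how many GPU-hours you actually use. A simple break-even estimate divides the purchase price by the hourly amount saved by owning (rental rate minus your own electricity cost). The $0.60/hour rental rate and $0.15/kWh electricity price below are hypothetical placeholders, not quotes from SimplePod or any other provider, and the sketch ignores the rest of the workstation, resale value, and idle power.

```python
# Buy-vs-rent break-even sketch. All rates are hypothetical placeholders.


def owner_cost_per_hour(gpu_power_kw: float, electricity_per_kwh: float) -> float:
    """Electricity cost of running your own GPU for one hour at full power."""
    return gpu_power_kw * electricity_per_kwh


def break_even_hours(
    purchase_price: float,
    rental_per_hour: float,
    gpu_power_kw: float = 0.45,         # RTX 4090 rated board power
    electricity_per_kwh: float = 0.15,  # hypothetical electricity price, USD
) -> float:
    """GPU-hours of use after which buying becomes cheaper than renting."""
    saving_per_hour = rental_per_hour - owner_cost_per_hour(gpu_power_kw, electricity_per_kwh)
    if saving_per_hour <= 0:
        # Running your own card costs at least as much per hour as renting,
        # so the purchase never pays for itself.
        return float("inf")
    return purchase_price / saving_per_hour


if __name__ == "__main__":
    hourly_rent = 0.60  # hypothetical rental rate, USD per GPU-hour
    for price in (1_599, 2_500):  # price range quoted in the article
        hours = break_even_hours(price, hourly_rent)
        years_full_time = hours / (40 * 52)  # 40-hour weeks
        print(f"${price:,}: break-even after {hours:,.0f} GPU-hours "
              f"(~{years_full_time:.1f} years at 40 h/week)")
```

With these placeholder numbers, an RTX 4090 bought at its $1,599 MSRP pays for itself after roughly 3,000 GPU-hours, just under a year and a half of 40-hour weeks; lighter or bursty usage favors renting.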

SimplePod: The Best GPU Rental Solution

If you’re looking for an efficient and cost-effective way to rent GPUs, SimplePod provides an excellent solution. SimplePod offers high-performance cloud-based GPU rental services tailored for AI development, deep learning, and machine learning professionals.

Why Choose SimplePod?

- On-Demand Access – Get immediate access to high-end GPUs, including RTX 4090.

- Flexible Pricing – Pay for only what you use with transparent and affordable pricing.

- Seamless Integration – Works with major AI frameworks like TensorFlow and PyTorch, and with tools such as Stable Diffusion.

- High Reliability – SimplePod ensures minimal downtime and high availability for mission-critical AI tasks.

- Optimized for Data Scientists – Designed for professionals working with large datasets, AI model training, and deep learning applications.
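After launching a rented instance or installing a local card, a quick PyTorch check confirms which GPU the framework actually sees and how much memory it reports. The sketch below uses standard `torch.cuda` calls and is not a SimplePod-specific API; the helper name is hypothetical, and it returns an empty list when PyTorch is not installed or no CUDA device is visible.

```python
from __future__ import annotations


def describe_visible_gpus() -> list[str]:
    """List the CUDA GPUs visible to PyTorch with their memory and SM count."""
    try:
        import torch  # optional dependency; may be absent on CPU-only machines
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    descriptions = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        gib = props.total_memory / 1024**3
        descriptions.append(
            f"cuda:{index} {props.name}: {gib:.1f} GiB, {props.multi_processor_count} SMs"
        )
    return descriptions


if __name__ == "__main__":
    gpus = describe_visible_gpus()
    print("\n".join(gpus) if gpus else "No CUDA GPU visible to PyTorch")
```

On an RTX 4090 instance, the reported name should include "RTX 4090" and the memory should be roughly 24 GiB; anything else suggests the instance is not the GPU you expected.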

By leveraging SimplePod’s cloud infrastructure, AI professionals can eliminate the burden of hardware maintenance while benefiting from state-of-the-art GPU performance.

Expanding the Use Cases of RTX 4090 for AI Professionals

Beyond traditional AI research, data scientists and machine learning engineers are utilizing the RTX 4090 for various applications:

- Advanced AI Image Recognition & Video Processing – Training complex CNNs for real-time object detection.

- Autonomous Systems & Robotics – AI-driven robotics simulations powered by cloud GPUs.

- Medical AI & Drug Discovery – Utilizing deep learning for medical imaging analysis and bioinformatics research.

- Smart Cities & IoT AI Models – Powering intelligent infrastructure management through AI.

- Algorithmic Trading & AI-driven FinTech – High-frequency trading models enhanced by deep learning capabilities.

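- Scientific Computing & Big Data Processing – Accelerating large-scale single-precision computation; workloads that depend on double precision (FP64) are better served by data center GPUs, since the RTX 4090's FP64 throughput is a small fraction of its FP32 rate.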

Conclusion

While the RTX 4090 is a powerful GPU, its high cost makes it an impractical choice for many AI professionals, machine learning engineers, and data scientists. Instead of committing to an expensive hardware purchase, GPU rental services like SimplePod provide a cost-effective, scalable, and maintenance-free alternative.

Final Takeaways:

- RTX 4090 performance benchmarks show it is an excellent choice for deep learning and AI research.

- AI development with RTX 4090 is highly efficient but expensive, making GPU rental services a cost-effective alternative.

- The RTX 4090's machine learning capabilities suit data scientists who need high processing power.

- The RTX 4090 is more power-efficient than its predecessor, but cloud GPUs can offer better long-term cost savings, especially for intermittent workloads.

- The RTX 4090 is an excellent option for data scientists, but cloud GPUs provide more flexibility and affordability.

FAQs

- Is the RTX 4090 good for AI development?

Yes, the RTX 4090 is one of the best consumer-grade GPUs for AI development, offering high CUDA core counts, Tensor Core enhancements, and ample VRAM for deep learning.

- What is the power consumption of the RTX 4090?

The RTX 4090 is rated at 450W total graphics power (TGP). That is high in absolute terms, but its performance per watt is better than that of previous generations.

- How does the RTX 4090 compare to the RTX 3090 for AI?

The RTX 4090 outperforms the RTX 3090 in CUDA cores, VRAM bandwidth, and power efficiency, making it better for AI workloads.

- Is the RTX 4090 worth it for data scientists?

Yes, data scientists working with large-scale AI models, deep learning, and machine learning will benefit from the RTX 4090’s high processing power.

- Can I rent an RTX 4090 instead of buying it?

Yes, cloud-based GPU rental services like SimplePod offer on-demand RTX 4090 instances, eliminating the need for expensive hardware purchases.