Introduction

Running large language models (LLMs) locally gives developers the freedom to experiment, customize, and maintain privacy. Ollama is a powerful command-line interface that allows for local LLM deployment with minimal setup. However, many developers lack access to the high-performance GPUs required for smooth operation. This is where SimplePod.ai steps in.

SimplePod.ai offers on-demand GPU rentals tailored to AI/ML workflows, with preconfigured environments that allow developers to launch local LLMs using Ollama within minutes. In this guide, we explore how to deploy Ollama using rented GPUs on SimplePod.ai, from selecting the right instance to running and customizing models like LLaMA, Mistral, and more.

1. Why Combine Ollama with SimplePod.ai GPU Rental?

Affordability and Accessibility

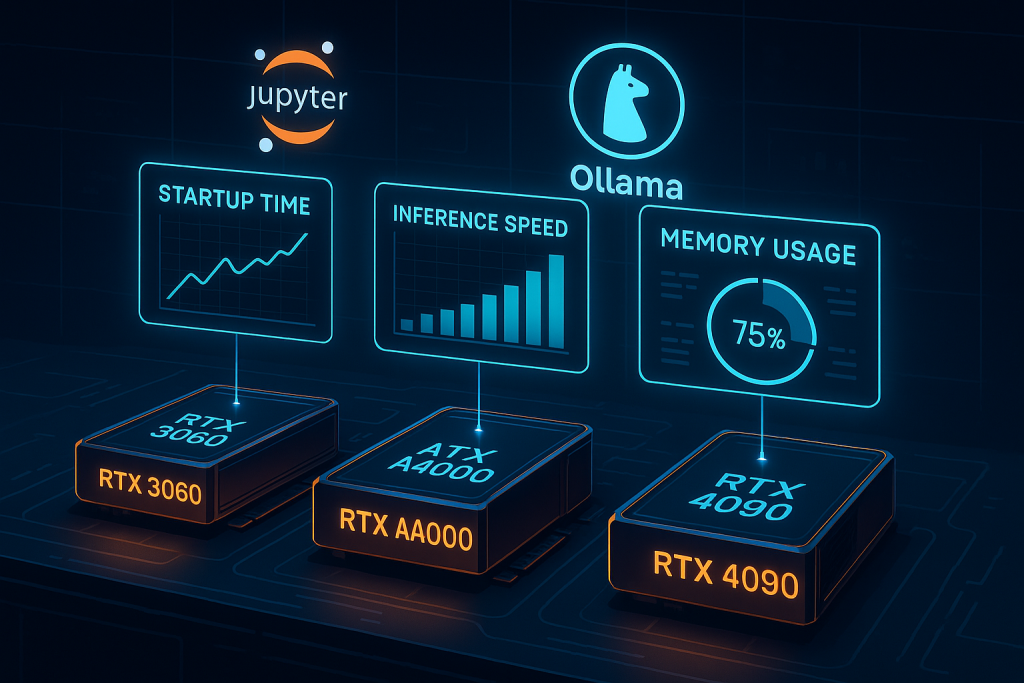

SimplePod.ai provides GPU rentals starting at just $0.05 per hour. Entry-level GPUs such as RTX 3060 and high-end models like RTX 4090 are available, enabling cost-effective experimentation and production-level workloads without the need to purchase physical hardware.

Ready-to-Use Environments

SimplePod.ai offers preconfigured templates like the “Ollama GPU Instance.” These images include all necessary drivers and a compatible Ubuntu environment. Users can deploy and begin interacting with Ollama almost instantly.

Usage Monitoring and Cost Control

With built-in dashboards for system resource monitoring, developers can track GPU, CPU, and memory usage. The platform also allows instances to be paused or stopped at any time to avoid unnecessary billing.

Persistent Storage

SimplePod.ai includes persistent storage options with high-speed connectivity, allowing users to save models, scripts, and results between sessions without data loss.

Trusted by the Community

Developers report consistent performance and reliability from SimplePod.ai. The availability of prebuilt environments and transparent pricing makes it a go-to choice for many AI/ML projects involving local inference.

2. Step-by-Step: Renting a GPU and Installing Ollama

Selecting a GPU Instance

After signing in to SimplePod.ai, navigate to the “How to Rent” section. Choose an instance template labeled “Ollama GPU Instance” or manually select your GPU based on the model you plan to run. For example, quantized 7B models require around 8 GB of VRAM, while larger models such as 13B or more benefit from 16 GB or greater.
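As a rough way to sanity-check these figures, the sketch below estimates VRAM from parameter count and quantization width. The 20% overhead factor (for the KV cache and runtime buffers) and the 4-bit default are assumptions chosen for illustration, not numbers published by Ollama or SimplePod.ai. Real usage varies with context length and the specific quantization.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: int = 4,
                     overhead_factor: float = 1.2) -> float:
    """Rough VRAM estimate in GB: weight size times an assumed overhead factor."""
    # One billion parameters at 8 bits per weight is roughly 1 GB.
    weight_gb = params_billion * bits_per_weight / 8
    return weight_gb * overhead_factor


def fits(params_billion: float, vram_gb: float, bits_per_weight: int = 4) -> bool:
    """True if the rough estimate fits within the given VRAM."""
    return estimate_vram_gb(params_billion, bits_per_weight) <= vram_gb


if __name__ == "__main__":
    for size in (7, 13, 34):
        print(f"{size}B @ 4-bit: ~{estimate_vram_gb(size):.1f} GB")
```

The estimate is deliberately conservative about nothing: treat it as a lower bound and leave headroom, which is why the guidance above suggests 8 GB for 7B models and 16 GB or more for 13B and up.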

Provisioning the Instance

Select the desired GPU and the Ollama-ready template. Click “Run” and wait a few minutes for the environment to launch. This template includes Ubuntu, Docker, NVIDIA drivers, and in some cases, Ollama preinstalled.

Accessing Your Environment

After deployment, you can use the built-in terminal or Jupyter Notebook interface. This makes it easy to run commands, develop Python scripts, and interact with models.

Installing Ollama (If Needed)

If Ollama is not preinstalled, open your terminal and run the following command:

curl -fsSL https://ollama.com/install.sh | sh

After installation, verify it with:

ollama --version

The software installs quickly and supports Ubuntu and other Linux distributions.
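If you prefer to check the installation from a Python script or notebook cell, a minimal sketch along these lines could work. The helper name `cli_version` is hypothetical; it only wraps the `ollama --version` command with the standard library.

```python
import shutil
import subprocess
from typing import Optional


def cli_version(binary: str = "ollama") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if the binary is not on PATH."""
    if shutil.which(binary) is None:
        return None
    result = subprocess.run([binary, "--version"],
                            capture_output=True, text=True, timeout=30)
    # Some tools print version info to stderr, so fall back to it.
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = cli_version()
    print(version if version else "ollama not found; run the install script first")
```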

3. Running Your First Ollama Model

Pulling a Model

Begin by listing the models already downloaded to the instance (the list is empty on a fresh install):

ollama list

Then pull a specific model:

ollama pull llama2:7b

Other supported models include Mistral, Gemma, Vicuna, and CodeLlama. Choose a version that matches your GPU’s capabilities.

Starting an Interactive Session

Use the following command to interact with the model:

ollama run llama2:7b

Once running, type a question or prompt. For example: “What is reinforcement learning?” Exit the session by typing /bye or pressing Ctrl+D.

Running Ollama as a Local Server

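To make Ollama accessible via its REST API, start the server (skip this step if the installer or template already runs Ollama as a background service):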

ollama serve

You can now send requests to:

http://localhost:11434/api/generate

Post a JSON payload like:

{
  "model": "llama2:7b",
  "prompt": "Define overfitting in machine learning."
}

To expose this endpoint externally, set the OLLAMA_HOST environment variable to 0.0.0.0 before starting the server and open port 11434 on the instance.
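The same request can be sent from Python with only the standard library. This is a minimal sketch, assuming the server is listening on the default local address. The function names are hypothetical, and `"stream": False` is added so the server returns a single JSON object whose `response` field holds the generated text.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request; stream=False asks for one JSON object instead of a stream."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=300) as resp:
        return json.loads(resp.read())["response"]
```

With the server running and the model pulled, `generate("llama2:7b", "Define overfitting in machine learning.")` returns the model's answer as a string.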

4. Using SimplePod.ai Features with Ollama

Monitoring and Controls

SimplePod.ai provides dashboards for live monitoring of GPU, CPU, and memory usage. You can control your instance directly from the dashboard and shut it down when idle to reduce costs.

Storage and File Persistence

Save your downloaded models, scripts, and logs in the persistent storage volume. Files are preserved across sessions, and data transfer speeds are optimized for AI workloads.

Development Workflows in Jupyter

For a more visual experience, use Jupyter Notebook. Write and run Python scripts that interact with Ollama through its CLI or REST interface. This is particularly useful for prototyping or educational use.
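When a notebook calls the REST interface without disabling streaming, the generate endpoint replies with newline-delimited JSON objects, each carrying a `response` fragment and a `done` flag. The sketch below shows one way to reassemble them. The helper name `collect_stream` is hypothetical, and the sample lines are hand-written stand-ins for real server output.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[bytes]) -> str:
    """Concatenate the "response" fragments from a newline-delimited JSON stream."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the generation
    return "".join(parts)


if __name__ == "__main__":
    sample = [
        b'{"response": "Hello", "done": false}\n',
        b'{"response": " world", "done": false}\n',
        b'{"response": "", "done": true}\n',
    ]
    print(collect_stream(sample))
```

In practice the object returned by `urllib.request.urlopen` can be passed in directly, since it iterates line by line.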

5. Customizing Models with Modelfiles

Ollama allows for full customization through Modelfiles. These text-based configuration files set parameters and define system-level instructions.

Example:

FROM llama2:7b
PARAMETER temperature 0.5
SYSTEM """
You are a helpful assistant for data scientists working with AI models.
"""

Create and run your custom model with:

ollama create aiassistant -f Modelfile

ollama run aiassistant

This enables tailored personas and specific response behavior.
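If you maintain several personas, generating Modelfiles from a script can keep them consistent. The following is a small sketch, not an Ollama feature: `render_modelfile` is a hypothetical helper that only assembles the FROM, PARAMETER, and SYSTEM instructions shown in the example above.

```python
def render_modelfile(base: str, system: str, temperature: float = 0.5) -> str:
    """Return Modelfile text built from the FROM, PARAMETER, and SYSTEM instructions."""
    return (
        f"FROM {base}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """\n{system.strip()}\n"""\n'
    )


if __name__ == "__main__":
    print(render_modelfile(
        "llama2:7b",
        "You are a helpful assistant for data scientists working with AI models.",
    ))
```

Write the returned text to a file named Modelfile, then build the model with the create command.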

6. Advanced Features and Integration

Docker Integration

For advanced users, Ollama can run inside a Docker container with GPU access:

docker run -d --gpus all -p 11434:11434 \
  -e OLLAMA_HOST=0.0.0.0 \
  --name ollama_server ollama/ollama

You can then use the REST API as usual.

Python and LangChain Integration

Use Ollama’s REST API in Python scripts or integrate with LangChain’s Ollama class for automated, prompt-based pipelines. This is ideal for applications like data extraction, summarization, and AI-driven workflows.
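As an illustration of the plain REST route, here is a sketch of a summarization call against Ollama's chat endpoint using only the standard library. It deliberately avoids LangChain, whose import path for the Ollama class differs between releases. The function names and the system instruction are assumptions made for this example.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"


def summarize_messages(text: str) -> list:
    """Build a chat message list: a system instruction plus the text to summarize."""
    return [
        {"role": "system", "content": "Summarize the user's text in three sentences."},
        {"role": "user", "content": text},
    ]


def chat(model: str, messages: list, url: str = CHAT_URL) -> str:
    """POST to the chat endpoint and return the reply (needs a running Ollama server)."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    req = urllib.request.Request(url, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["message"]["content"]
```

A pipeline step would then look like `chat("llama2:7b", summarize_messages(document_text))`, where `document_text` is whatever your script has loaded.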

7. Best Practices and Troubleshooting

Model Size Selection

Check GPU specs before selecting a model. Use quantized versions when available. A 7B quantized model works well with 8 GB VRAM; 13B or larger models typically need 16 GB or more.

Billing Management

Stop or pause your instance when not in use. SimplePod.ai bills hourly, so managing usage directly impacts cost.
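To see how usage translates into spend, a quick estimate like the one below can help. The rates are the approximate figures quoted in this article's FAQ, and rounding partial hours up to a full hour is an assumption about billing, not confirmed SimplePod.ai policy; check the current pricing page before budgeting.

```python
import math

# Approximate hourly rates (USD) quoted in this article's FAQ.
RATES = {"RTX 3060": 0.05, "RTX A4000": 0.09, "RTX 4090": 0.30}


def estimate_cost(gpu: str, hours: float, round_up: bool = True) -> float:
    """Estimated cost in USD; round_up assumes partial hours bill as full hours."""
    billable = math.ceil(hours) if round_up else hours
    return round(billable * RATES[gpu], 2)


if __name__ == "__main__":
    for gpu in RATES:
        print(f"{gpu}: 8 hours is about ${estimate_cost(gpu, 8):.2f}")
```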

Common Issues and Fixes

- If the API endpoint is not responding, check your port mappings and environment settings.

- Restart Ollama if the model fails to load.

- Switch to a smaller model or quantized version for better performance on entry-level GPUs.
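For the first item in the list above, a quick way to tell a networking problem from an Ollama problem is to test whether anything is listening on the port at all. This is a generic TCP check, sketched with the standard library. The function name is hypothetical, and 11434 is the port used throughout this guide.

```python
import socket


def port_open(host: str = "localhost", port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Ollama port reachable:", port_open())
```

If this returns False on the instance itself, the server is not running; if it returns True locally but False from outside, look at the port mapping and the OLLAMA_HOST setting.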

Performance Tips

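Control how long idle models stay loaded (the OLLAMA_KEEP_ALIVE environment variable for ollama serve, or the keep_alive field on a request) so VRAM is freed when you no longer need a model, and keep the context window (the num_ctx option) as small as the task allows to reduce memory use and improve speed.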
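Both settings can also be applied per request through the REST API, using the `keep_alive` field and the `num_ctx` entry under `options`. The sketch below only builds such a request body. The function name and the default values (2048 tokens, five minutes) are illustrative assumptions, not recommendations from Ollama or SimplePod.ai.

```python
import json


def tuned_payload(model: str, prompt: str, num_ctx: int = 2048,
                  keep_alive: str = "5m") -> dict:
    """Request body with an explicit context window and idle-unload time."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},  # context window size in tokens
        "keep_alive": keep_alive,         # how long the model stays loaded afterwards
    }


if __name__ == "__main__":
    print(json.dumps(tuned_payload("llama2:7b", "Define overfitting."), indent=2))
```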

8. Real-World Project Ideas

- Chatbot Development: Create a private, local assistant that answers questions from internal data.

- Code Generation: Use CodeLlama for generating boilerplate and functional code.

- Document Summarization: Use models to summarize long PDFs or transcripts.

- Research Assistant: Integrate with LangChain to create an AI agent that answers technical questions.

- REST API Deployment: Build a lightweight back-end for a web app that leverages LLM power.

All of these can be launched from a rented SimplePod.ai GPU instance, using Ollama as the model engine.

9. Summary

Combining Ollama with GPU rentals from SimplePod.ai unlocks a powerful, private, and cost-effective workflow for anyone building with large language models. With prebuilt environments, fast storage, real-time monitoring, and flexible pricing, SimplePod.ai makes it easy to scale without investing in expensive hardware.

Whether you’re experimenting with LLaMA, building custom assistants, or integrating LLMs into production workflows, Ollama provides a flexible runtime—and SimplePod.ai provides the infrastructure to support it.

Get started today by creating a SimplePod.ai account, launching an Ollama-ready instance, and pulling your first model. The future of private, local LLM development is now.

FAQs

What is Ollama?

Ollama is a command-line tool that allows developers to run large language models locally using a simple interface. It supports both chat and REST API modes.

Can I use Ollama with a rented GPU from SimplePod.ai?

Yes. SimplePod.ai offers Ollama-ready GPU instances with all required dependencies preinstalled or installable via a one-click template.

How do I install Ollama on a GPU instance?

Use the official script:

curl -fsSL https://ollama.com/install.sh | sh

What size GPU do I need?

7B quantized models require around 8 GB of VRAM. For 13B models or larger, use a GPU with 16 GB or more, such as the RTX A4000 or RTX 4090.

What are the hourly costs?

Costs vary by GPU: RTX 3060 is around $0.05/hr, RTX A4000 around $0.09/hr, and RTX 4090 up to $0.30/hr.