Introduction

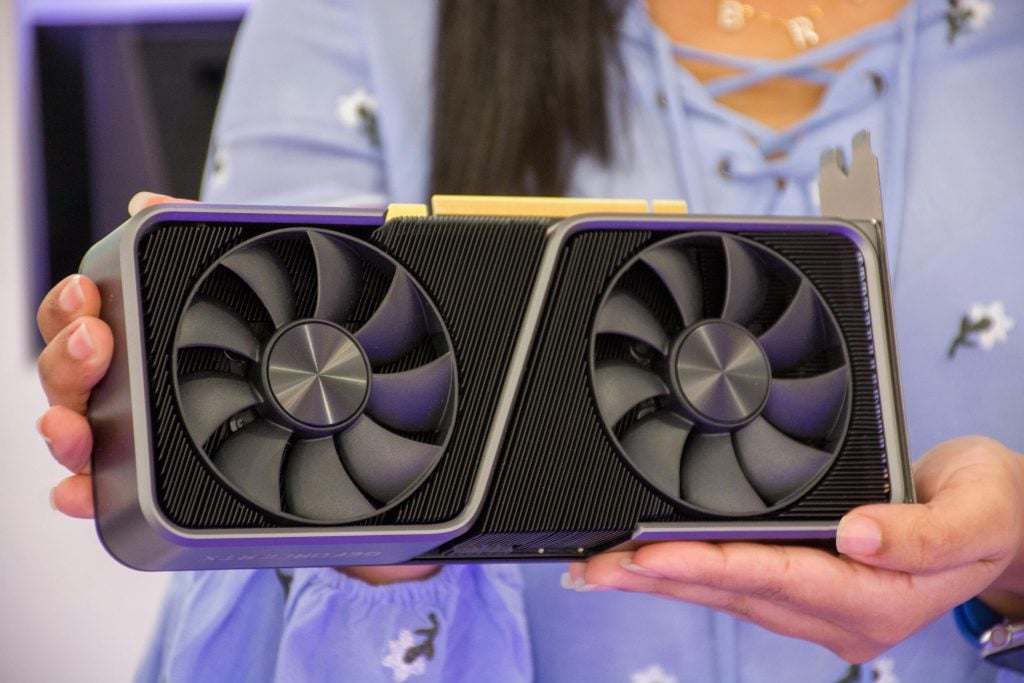

If you’re diving into artificial intelligence (AI) or machine learning (ML), you’ve likely heard about the importance of GPUs. Graphics Processing Units (GPUs) are the powerhouse hardware behind today’s AI boom.

But what exactly makes a GPU so special for ML tasks? And how do cloud GPU services let you tap into that power without owning expensive hardware?

In this beginner-friendly guide, we’ll break down the key concepts of cloud GPUs, from what a GPU actually does, through essential terms like VRAM, memory bandwidth, and CUDA, to how cloud providers offer GPU muscle on demand. By the end, you’ll have a solid grasp of cloud GPU basics and how they turbocharge AI and ML workflows.

What is a GPU and Why Does AI Need It?

A Graphics Processing Unit (GPU) is a specialized processor originally designed to accelerate graphics rendering. Unlike a CPU (Central Processing Unit), which has a relatively small number of powerful cores optimized for fast sequential processing, a GPU contains hundreds or thousands of smaller, simpler cores designed to work on many tasks in parallel.

This parallel architecture makes GPUs extremely efficient at the linear algebra computations that underlie neural networks and other ML algorithms. In practical terms, training a deep learning model that might take days or weeks on a CPU can often be done in a matter of hours on a modern GPU thanks to this parallelism.

GPU vs CPU: Built for Different Tasks

GPUs aren’t “smarter” than CPUs; they’re just built differently. A CPU is like a sharp-minded problem solver tackling one thing at a time at high speed, ideal for complex logic and diverse tasks.

A GPU is more like an army of workers tackling many simple math problems at once. For example, in a neural network, a GPU can calculate thousands of neuron operations simultaneously, something a CPU would have to work through largely one at a time.

This is why tasks like image recognition, language translation, or any large-scale matrix math run dramatically faster on GPUs. GPUs accelerate AI by processing huge batches of calculations in parallel, a perfect fit for the math-heavy world of ML.
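
To see why this maps so well onto a GPU, here is a minimal sketch in plain Python (no GPU involved) of one fully connected neural-network layer. The function names and the layer sizes are illustrative assumptions; real frameworks run this as a single matrix multiplication on optimized GPU kernels rather than as a Python loop.

```python
from typing import List


def dense_layer(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Compute one fully connected layer, y = W x + b, one output neuron at a time.

    Each neuron reads only the shared input x and its own row of W, so no
    neuron waits on another. This loop runs them in sequence; a GPU can
    assign them to separate threads and compute them simultaneously.
    """
    outputs = []
    for row, b in zip(weights, bias):  # one iteration per output neuron
        outputs.append(sum(w * xi for w, xi in zip(row, x)) + b)
    return outputs


def multiply_adds(batch: int, in_features: int, out_features: int) -> int:
    """Multiply-add operations needed for one layer over a batch of inputs."""
    return batch * in_features * out_features


print(dense_layer([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 1.0]))  # [1.0, 2.0, 4.0]
# Hypothetical layer: 4,096 inputs, 4,096 outputs, batch of 64 examples.
print(f"{multiply_adds(64, 4096, 4096):,}")  # 1,073,741,824
```

Over a billion multiply-adds for a single layer on a single batch is exactly the kind of uniform, highly parallel work that thousands of GPU cores can split between them.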

VRAM: The Memory Behind the Magic

When working with GPUs, you’ll often see the term VRAM (Video Random Access Memory). This is the dedicated memory on the graphics card that stores data for the GPU to process – think of it as the GPU’s local workspace.

In AI terms, VRAM holds your neural network’s parameters (weights), the activations computed along the way, and the batch of input data (like images or text sequences) being processed; during training it must also hold the gradients and optimizer state. The more VRAM you have, the larger the models and batch sizes you can work with without running out of memory.
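
A rough way to reason about VRAM is to count bytes. The sketch below, with hypothetical helper names, estimates the memory needed for a model's weights from its parameter count and numeric precision, plus the size of one batch of input images. It deliberately ignores activations, gradients, optimizer state, and framework overhead, which during training can add several times the size of the weights, so treat the result as a lower bound.

```python
# Bytes per value for common numeric formats.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(num_params: int, dtype: str = "fp32") -> float:
    """Memory needed just to hold the model weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e9


def image_batch_mb(batch: int, channels: int, height: int, width: int, dtype: str = "fp32") -> float:
    """Memory for one batch of input images, in megabytes (1 MB = 1e6 bytes)."""
    return batch * channels * height * width * BYTES_PER_VALUE[dtype] / 1e6


# Hypothetical 7-billion-parameter model stored in fp16:
print(weights_gb(7_000_000_000, "fp16"))  # 14.0
# 32 RGB images at 224x224 pixels in fp32:
print(image_batch_mb(32, 3, 224, 224))  # 19.267584
```

In this example the weights dwarf the input batch; it is usually the model, plus the activations and training state it generates, that decides whether a job fits in VRAM.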

Understanding Memory Requirements

If you’ve tried to train a model and hit an “out of memory” error, it likely means your GPU’s VRAM was insufficient for the task. High-end GPUs might have 16 GB, 32 GB, or even more VRAM, allowing them to handle very large neural networks or high-resolution data.

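VRAM is crucial because a model that doesn’t fit in GPU memory can’t be processed entirely on the GPU. Frameworks typically stop with an out-of-memory error; workarounds that offload part of the model or data to system RAM let the job run but can slow training dramatically, because data must shuttle back and forth over the comparatively slow link between the GPU and the rest of the system. When selecting a GPU for ML, memory size is often as important as raw compute power.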
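
When a job does hit an out-of-memory error, a common first response is to shrink the batch size. The sketch below automates that by halving the batch until one step succeeds. `run_step` is a hypothetical callable standing in for your training step, and the exception type is a parameter: recent PyTorch versions expose `torch.cuda.OutOfMemoryError` for this case, while the default here is Python's built-in `MemoryError` so the example runs anywhere.

```python
from typing import Callable, Type


def largest_fitting_batch(
    run_step: Callable[[int], None],
    start: int,
    oom_error: Type[BaseException] = MemoryError,
) -> int:
    """Halve the batch size until run_step(batch) completes without an OOM error."""
    batch = start
    while batch >= 1:
        try:
            run_step(batch)  # e.g. one forward/backward pass at this batch size
            return batch
        except oom_error:
            # With PyTorch you might also call torch.cuda.empty_cache() here
            # to release unused cached memory before retrying.
            batch //= 2
    raise RuntimeError("Even a batch of 1 does not fit; the model may be too large for this GPU.")


def fake_step(batch: int) -> None:
    """Hypothetical step that only fits in memory for batches of 48 or fewer."""
    if batch > 48:
        raise MemoryError(f"batch {batch} does not fit")


print(largest_fitting_batch(fake_step, start=256))  # 32
```

If even a batch of 1 fails, the model itself is too large for the card, which is the point where a GPU with more VRAM (or the offloading workarounds above) becomes necessary.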

Memory Bandwidth: Feeding the Beast

Equally important is memory bandwidth – the speed at which data can move between the GPU processor and its VRAM. Imagine a race car (the GPU cores) that can run at top speed, but it’s stuck waiting for fuel deliveries – that’s a GPU with insufficient memory bandwidth.

Memory bandwidth, measured in gigabytes per second (GB/s), determines how quickly data flows, keeping those hundreds or thousands of GPU cores busy crunching numbers instead of idling. Many modern GPUs offer bandwidth in the hundreds of GB/s, and top data-center GPUs exceed 1,000 GB/s.

For example, a single GPU might advertise 400+ GB/s to keep its cores fed with data. In short, ample VRAM and high memory bandwidth together allow a GPU to chew through data-heavy AI tasks efficiently.
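
Bandwidth also puts a hard floor under how fast some workloads can run. A back-of-the-envelope sketch with hypothetical numbers: if every step must read all of a model's weights from VRAM once, the step can never finish faster than the weight bytes divided by the bandwidth, no matter how many cores are waiting.

```python
def min_read_time_ms(num_bytes: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time to stream num_bytes from VRAM at bandwidth_gb_s (GB/s)."""
    bytes_per_second = bandwidth_gb_s * 1e9
    return num_bytes / bytes_per_second * 1e3  # seconds -> milliseconds


# Hypothetical: 14 GB of weights (e.g. 7 billion parameters at 2 bytes each), read once per step.
weights_bytes = 14e9
for bandwidth in (400, 1000, 2000):  # GB/s
    print(f"{bandwidth:>5} GB/s -> at least {min_read_time_ms(weights_bytes, bandwidth):.1f} ms per step")
```

At 400 GB/s that floor is 35 ms per step; at 2,000 GB/s it drops to 7 ms. This is why memory-bound workloads, such as generating text one token at a time with a large model, can run faster on a higher-bandwidth GPU even when the raw compute figures are similar.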

CUDA: Unlocking GPU Computing Power

You might have come across the term CUDA when exploring GPUs for AI. CUDA (Compute Unified Device Architecture) is NVIDIA’s parallel computing platform and programming model for its GPUs.

In simpler terms, it’s a software layer that lets programmers use the GPU for general-purpose computing (not just graphics). When people talk about “CUDA cores,” they’re referring to the GPU’s parallel processing cores that can be programmed through CUDA.

CUDA in Practice

As an AI practitioner, you usually don’t write raw CUDA code (unless you’re developing custom GPU kernels). Instead, frameworks like TensorFlow and PyTorch use CUDA under the hood to execute your model’s operations on the GPU.

All you need is a compatible NVIDIA driver and a CUDA-enabled build of your framework (plus the CUDA libraries it depends on, which some builds bundle), and the framework will automatically use the GPU to accelerate math operations. In ML contexts, the term CUDA is often used interchangeably with GPU acceleration.

An “NVIDIA CUDA GPU” essentially means an NVIDIA graphics card capable of running GPU-accelerated computations for your ML code.

NVIDIA’s CUDA has become a standard in deep learning because it’s widely supported and highly optimized. There are alternatives (such as OpenCL, AMD’s ROCm, or Apple’s Metal for Apple GPUs), but if you’re using popular deep learning libraries on NVIDIA hardware, CUDA is doing the heavy lifting behind the scenes.
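
In PyTorch, for example, letting the framework use CUDA mostly comes down to placing the model and its data on a `cuda` device. The sketch below assumes PyTorch is installed and falls back to the CPU if it isn't, or if no CUDA GPU is visible; the function name and layer sizes are arbitrary choices for illustration.

```python
def run_on_best_device() -> str:
    """Run a tiny linear layer on the GPU if PyTorch can see one, otherwise on the CPU."""
    try:
        import torch
    except ImportError:
        return "cpu (PyTorch not installed)"

    # True only when a CUDA-enabled PyTorch build finds a working NVIDIA driver and GPU.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    model = torch.nn.Linear(512, 256).to(device)  # move the layer's weights to the chosen device
    x = torch.randn(64, 512, device=device)  # create the input batch on the same device
    y = model(x)  # on "cuda", PyTorch runs this matrix multiply with CUDA kernels

    label = torch.cuda.get_device_name(0) if device.type == "cuda" else "cpu"
    return f"{label}: output shape {tuple(y.shape)}"


print(run_on_best_device())
```

Note that the model and its inputs must live on the same device; mixing a `cuda` model with a CPU tensor raises an error.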

Cloud GPU Services: AI Computing On-Demand

Now that we’ve covered what GPUs can do, a key question arises: Do you need to buy an expensive GPU card to benefit from this power? Thanks to cloud computing, the answer is no.

Cloud GPU services allow individuals and companies to rent GPUs by the hour (or second), giving on-demand access to high-end graphics cards in remote data centers. This is transformational for AI development because you can scale your compute power to your needs without huge upfront investment.

How Cloud GPUs Work

Cloud providers like SimplePod.ai offer virtual machines equipped with GPUs. This model is incredibly flexible. If you have a short-term project that needs a burst of compute (say, training a model for a few days), you can rent multiple GPU machines to speed it up, then shut them down when done.

No need to maintain or pay for hardware when you’re not using it. Cloud GPUs also lower the barrier for enthusiasts; anyone with an internet connection and a credit card (and sometimes even free trial credits) can experiment with training neural networks on serious hardware.
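
The trade-off in renting several GPUs for a short job can be estimated with simple arithmetic. The helper below is a hypothetical sketch: the hourly rate is a placeholder, not a quoted price, and `scaling_efficiency` is an assumed fraction of ideal speedup, since multi-GPU training rarely scales perfectly.

```python
from typing import Tuple


def job_estimate(single_gpu_hours: float, num_gpus: int, rate_per_gpu_hour: float,
                 scaling_efficiency: float = 1.0) -> Tuple[float, float]:
    """Return (wall-clock hours, total cost) for spreading a job across num_gpus."""
    effective_speedup = num_gpus * scaling_efficiency
    wall_clock_hours = single_gpu_hours / effective_speedup
    cost = wall_clock_hours * num_gpus * rate_per_gpu_hour  # every rented GPU is billed the whole time
    return wall_clock_hours, cost


# Hypothetical job: 48 hours on one GPU, at a placeholder rate of $1.00 per GPU-hour.
for gpus, eff in [(1, 1.0), (4, 1.0), (4, 0.8)]:
    hours, cost = job_estimate(48, gpus, 1.00, eff)
    print(f"{gpus} GPU(s), {eff:.0%} efficiency: {hours:.1f} h, ${cost:.2f}")
```

With perfect scaling, four GPUs finish in a quarter of the time for the same total cost; at 80% efficiency the job finishes in 15 hours instead of 48 but costs a quarter more.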

Benefits of Using Cloud GPU Services

- Scalability and Flexibility: Need more compute? Launch more GPU instances. Cloud platforms let you scale up to multiple GPUs or even clusters of machines for large training jobs.

- Cost-Effective Solution: For occasional needs, renting is cheaper than buying. You avoid the large upfront cost of a GPU (which could be thousands of dollars for top models) and the ongoing costs of electricity and maintenance.

- Access to Latest Hardware: Cloud providers often offer cutting-edge GPUs that you might not be able to afford personally, so you can use the latest hardware soon after it becomes available.

- No Maintenance Hassles: The cloud provider handles hardware setup, driver installation, cooling, hardware failures, etc. You focus on your ML code, not on building and maintaining a GPU rig.

- Geographic Flexibility: You can choose data center regions close to you or your data source to reduce latency. This is useful if you’re serving AI models to users around the world.
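
To put rough numbers on the cost-effectiveness point in the list above, here is a simple break-even sketch. It uses placeholder prices rather than real quotes and ignores resale value, depreciation, and the rest of the machine a GPU needs, so read it as a rough guide only.

```python
def break_even_hours(purchase_price: float, rent_per_hour: float,
                     own_running_cost_per_hour: float = 0.0) -> float:
    """Hours of use after which buying a GPU becomes cheaper than renting one.

    Each hour you rent instead of own costs (rent - running cost) extra, so
    owning pays off once those extra costs add up to the purchase price.
    """
    extra_cost_per_hour = rent_per_hour - own_running_cost_per_hour
    if extra_cost_per_hour <= 0:
        return float("inf")  # renting is never more expensive per hour
    return purchase_price / extra_cost_per_hour


# Placeholder figures: $2,000 card, $0.50/h to rent, $0.10/h electricity if owned.
hours = break_even_hours(2000, 0.50, 0.10)
print(f"Break-even after about {hours:,.0f} hours of use")
```

With these placeholder numbers, owning pays off only after about 5,000 hours, roughly two years at 50 hours a week, which is why occasional users usually come out ahead by renting.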

Practical Tips for Getting Started with Cloud GPUs

Getting started with cloud GPUs doesn’t have to be complicated, especially when using a streamlined platform like SimplePod.ai. Whether you’re training deep learning models, running AI workloads, or experimenting with machine learning, SimplePod makes GPU computing accessible and efficient. Here’s how to get started:

1. Choose the Right GPU Instance on SimplePod.ai

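Instead of navigating complex cloud provider setups, you pick the GPU instance that best suits your needs from SimplePod.ai’s easy-to-use interface. Use the concepts above as your checklist: make sure the instance has enough VRAM for your model and batch size, and enough memory bandwidth for data-heavy work. No hidden costs, no overcomplicated configurations: just straightforward, on-demand access to powerful GPUs.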

2. Set Up Your ML Environment in Seconds

Unlike traditional cloud providers where you need to install drivers, configure dependencies, and troubleshoot compatibility issues, SimplePod.ai comes pre-configured with all the essential AI/ML libraries.
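
Even on a pre-configured image, it is worth a quick check that the libraries you expect are present. This sketch uses only Python's standard `importlib.metadata`; the package names listed are examples, not a statement of what SimplePod.ai actually ships.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for pkg, version in installed_versions(["torch", "tensorflow", "numpy"]).items():
    print(f"{pkg:<12} {version or 'not installed'}")
```

Pair this with your framework's own CUDA check (for PyTorch, `torch.cuda.is_available()`) to confirm the GPU is actually visible to your code.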

3. Effortless Data Management

When working with large datasets, slow transfers can be a bottleneck. SimplePod provides fast and seamless data access, ensuring your AI workloads run efficiently.
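
Whatever the storage behind it, a common way to keep slow data loading from stalling the GPU is to prepare the next batches while the current one is being processed. The generic sketch below does this with a background thread and a bounded queue; `load_batches` is a hypothetical stand-in for your loader. In PyTorch, `DataLoader(..., num_workers=N, pin_memory=True)` provides a more complete version of the same idea.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def prefetch(batches: Iterable[T], depth: int = 4) -> Iterator[T]:
    """Yield items from `batches`, loading up to `depth` items ahead on a background thread."""
    buffer: queue.Queue = queue.Queue(maxsize=depth)
    done = object()  # sentinel marking the end of the stream
    errors: List[BaseException] = []

    def producer() -> None:
        try:
            for item in batches:  # slow loading/decoding happens here, off the main thread
                buffer.put(item)  # blocks while `depth` items are already waiting
        except Exception as exc:  # hand loader errors over to the consumer
            errors.append(exc)
        finally:
            buffer.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()
        if item is done:
            if errors:
                raise errors[0]
            return
        yield item


def load_batches(n: int) -> Iterator[int]:
    """Hypothetical loader: sleeps to mimic reading and decoding a batch from storage."""
    for i in range(n):
        time.sleep(0.01)
        yield i


print(list(prefetch(load_batches(5))))  # [0, 1, 2, 3, 4]
```

In a real training loop, the body of `for batch in prefetch(...)` would be your GPU step, and the next batches load while it runs.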

4. Monitor and Optimize GPU Performance

Maximize efficiency by tracking GPU usage in real time with the built-in monitoring tools on SimplePod.ai.
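
On any machine with an NVIDIA driver you can also query similar numbers yourself through `nvidia-smi`. This sketch shells out to its `--query-gpu` mode and parses the CSV output; the choice of fields is ours, and the function simply returns an empty list when `nvidia-smi` is missing or fails.

```python
import shutil
import subprocess
from typing import Dict, List, Optional

# Fields for nvidia-smi's --query-gpu option; with "nounits", memory is reported in MiB.
FIELDS = ["utilization.gpu", "memory.used", "memory.total"]


def _to_int(value: str) -> Optional[int]:
    value = value.strip()
    return int(value) if value.isdigit() else None  # e.g. "[N/A]" on some GPUs


def parse_gpu_stats(csv_text: str) -> List[Dict[str, Optional[int]]]:
    """Turn 'util, used, total' CSV lines (one per GPU) into dictionaries."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (_to_int(v) for v in line.split(","))
        stats.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return stats


def read_gpu_stats() -> List[Dict[str, Optional[int]]]:
    """Query every visible NVIDIA GPU once; returns [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS), "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []  # e.g. the driver is not loaded
    return parse_gpu_stats(result.stdout)


for i, gpu in enumerate(read_gpu_stats()):
    print(f"GPU {i}: {gpu['util_pct']}% busy, {gpu['mem_used_mib']}/{gpu['mem_total_mib']} MiB used")
```

Utilization that stays well below 100% during training often means the GPU is waiting on data loading or CPU-side work rather than computing.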

5. No Unnecessary Costs—Shut Down with One Click

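A major advantage of SimplePod.ai over traditional cloud providers is cost efficiency. With other platforms, it’s easy to forget a running instance and rack up unexpected charges; shutting your instance down with one click as soon as a job finishes helps ensure you pay only for the compute you actually use.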

Conclusion

Cloud GPU technology has opened up incredible possibilities for AI and machine learning enthusiasts and professionals alike. We’ve demystified the basic terminology – from understanding how GPUs differ from CPUs, to the role of VRAM and memory bandwidth in ensuring your GPU runs at full throttle.

We also touched on CUDA, the magic sauce that lets popular AI frameworks leverage GPU power, and saw how cloud services put all this hardware at your fingertips on demand.

With these fundamentals in hand, you’re well on your way to accelerating your own AI projects. Whether you’re training a simple model on a single GPU or scaling out a complex deep learning experiment on a fleet of cloud GPUs, knowing these key concepts will help you make informed decisions and troubleshoot issues like a pro.

AI thrives on computation, and now you understand the core pieces of the GPU puzzle that make that possible. Happy experimenting with your cloud GPUs, and keep pushing the boundaries of what you can build in the world of machine learning!